opengl proamming guide chapter 12——Compute Shaders

compute shaders run in a completely separate stage of the gpu than the rest of the graphics pipeline. they allow an application to make use of the power of the gpu for general purpose work that may or may not be related to graphics. compute shaders have access to many of the same resources as graphics shaders, but have more control over their application flow and how they execute. this chapter introduces the compute shader and describes its use.

this chapter has the following major sections:

- “overview” gives a brief introduction to compute shaders and outlines their general operation.

- the organization and detailed working of compute shaders with regards to the graphics processor is given in “workgroups and dispatch”.

- next, methods for communicating between the individual invocations of a compute shader are presented in “Communication and Synchronization”, along with the synchronization mechanisms that can be used to control the flow of data between those invocations.

- a few examples of compute shaders are shown, including both graphics and nongraphics work are given in “exmaples”.

overview

the graphics processor is an immensely powerful device capable of performing trillions of calculations each second. over the years, it has been developed to crunch the huge amount of math operations required to render real-time graphics. however, it is possible to use the computational power of the processor for tasks that are not considered graphics, or that do not fit nearly into the relatively fixed graphical pipeline. to enable this type of use, opengl includes a special shader stage called the compute shader. The compute shader can be considered a special, single-stage pipeline that has no fixed input or output. Instead, all automatic input is through a handful of built-in variables. If additional input is needed, those fixed-function inputs may be used to control access to textures and buffers.

All visible side effects are through image stores, atomics, and access to atomic counters.

While at first this seems like it would be quite limiting, it includes general read and write of memory, and this level of flexibility and lack of graphical idioms open up a wide range of applications for compute shaders. Compute shaders in OpenGL are very similar to any other shader stage.

They are created using the glCreateShader() function, compiled using glCompileShader(), and attached to program objects using glAttachShader(). These programs are linked as normal by using glLinkProgram(). Compute shaders are written in GLSL and in general, any functionality accessible to normal graphics shaders (for example, vertex, geometry or fragment shaders) is available. Obviously, this excludes graphics pipeline functionality such as the geometry shaders’ EmitVertex() or EndPrimitive(), or to the similarly pipeline-specific built-in variables. On the other hand, several built-in functions and variables are available to a compute shader that are available nowhere else in the OpenGL pipeline.

workgroups and dispatch

just as the graphics shaders fit into the pipeline at specific points and operate on graphics-specific elements, compute shaders effectively fit into the (single-stage) compute pipeline and operate on compute-specific elements.

in this analogy, vertex shaders execute per vertex, geometry shaders execute per primitive and fragment shaders execute per fragment.

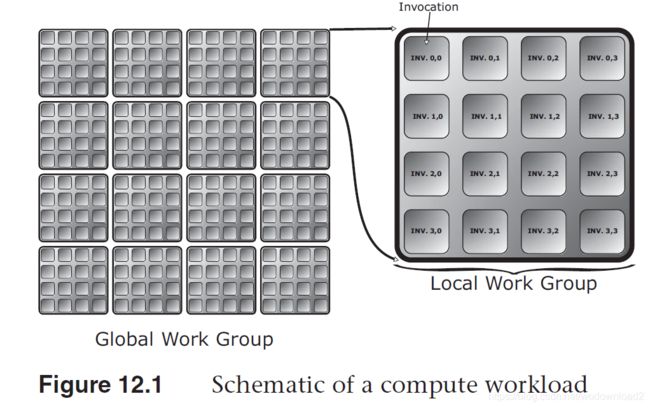

performance of graphics hardware is obtained through parallelism, which in turn is achieved through the very large number of vertices, primitives, or fragments, respectively, passing through each stage of the pipeline, in the context of compute shaders, this parallelism is more explicit, with work being launched in groups known as workgroups. workgroups have a local neighborhood known as a local workgroup, and these are again grouped to form a global workgroup as the result of one of the dispatch commands.

The compute shader is then executed once for each element of each local workgroup within the global workgroup. Each element of the workgroup is known as a work item and is processed by an invocation. The invocations of the compute shader can communicate with each other via variables and memory, and can perform synchronization operations to keep their work coherent. Figure 12.1 shows a schematic of this work layout. In this simplified example, the global workgroup consists of 16 local workgroups, and each local workgroup consists of 16 invocations, arranged in a 4 × 4 grid. Each invocation has a local index that is a two-dimensional vector.

While Figure 12.1 visualizes the global and local workgroups as twodimensional entities, they are in fact in three dimensions. To issue work

that is logically one- or two-dimensional, we simply make a three-dimensional work size where the extent in one or two of the dimensions is of size one. The invocations of a compute shader are essentially independent and may run in parallel on some implementations of OpenGL. In practice, most OpenGL implementations will group subsets of the work together and run it in lockstep, grouping yet more of these subsets together to form the local workgroups.

The size of a local workgroups is defined in the compute shader source code using an input layout qualifier. the global workgroup size is measoured as an integer multiple of the local workgroup size. as the compute shader executes, it is provided with its location within the local workgroup, the size of the workgroup, and the location of its local workgroup within the global workgroup through built-in variables. There are further variables available that are derived from these providing the location of the invocation within the global workgroup, among other things. The shader may use these variables to determine which elements of the computation it should work on and also can know its neighbors within the workgroup, which facilitates some amount of data sharing.

The input layout qualifiers that are used in the compute shader to declare the local workgroup size are local_size_x, local_size_y, and

local_size_z. The defaults for these are all one, and so omitting local_size_z, for example, would create an N × M two-dimensional

workgroup size. An example of declaring a shader with a local workgroup size of 16 × 16 is shown in Example 12.1.

Example 12.1 Simple Local Workgroup Declaration

#version 430 core

// Input layout qualifier declaring a 16 x 16 (x 1) local

// workgroup size

layout (local_size_x = 16, local_size_y = 16) in;

void main(void)

{

// Do nothing.

}

Although the simple shader of Example 12.1 does nothing, it is a valid compute shader and will compile, link, and execute on an OpenGL

implementation. To create a compute shader, simply call glCreateShader() with type set to GL_COMPUTE_SHADER, set the shader’s source code with glShaderSource() and compile it as normal. Then, attach the shader to a program and call glLinkProgram(). This creates the executable for the compute shader stage that will operate on the work items. A complete example of creating and linking a compute program1 is shown in Example 12.2.

Example 12.2 Creating, Compiling, and Linking a Compute Shader

GLuint shader, program;

static const GLchar* source[] =

{

"#version 430 core\n"

"\n"

"// Input layout qualifier declaring a 16 x 16 (x 1) local\n"

"// workgroup size\n"

"layout (local_size_x = 16, local_size_y = 16) in;\n"

"\n"

"void main(void)\n"

"{\n"

" // Do nothing.\n"

"}\n"

};

shader = glCreateShader(GL_COMPUTE_SHADER);

glShaderSource(shader, 1, source, NULL);

glCompileShader(shader);

program = glCreateProgram();

glAttachShader(program, shader);

glLinkProgram(program);

Once we have created and linked a compute shader as shown in Example 12.2, we can make the program current using glUseProgram() and then dispatch workgroups into the compute pipeline using the function glDispatchCompute(), whose prototype is as follows:

void glDispatchCompute(GLuint num_groups_x, GLuint num_groups_y, GLuint num_groups_z);

Dispatch compute workgroups in three dimensions. num_groups_x, num_groups_y, and num_groups_z specify the number of workgroups to launch in the X, Y, and Z dimensions, respectively. Each parameter must be greater than zero and less than or equal to the corresponding element of the implementation-dependent constant vector GL_MAX_COMPUTE_WORK_GROUP_SIZE.

When you call glDispatchCompute(), OpenGL will create a three-dimensional array of local workgroups whose size is num_groups_x by

num_groups_y by num_groups_z groups. Remember, the size of the workgroup in one or more of these dimensions may be one, as may be any of the parameters to glDispatchCompute(). Thus the total number of invocations of the compute shader will be the size of this array times the size of the local workgroup declared in the shader code. As you can see, this can produce an extremely large amount of work for the graphics processor and it is relatively easy to achieve parallelism using compute shaders.

As glDrawArraysIndirect() is to glDrawArrays(), so glDispatchComputeIndirect() is to glDispatchCompute(). glDispatchComputeIndirect() launches compute work using parameters stored in a buffer object. The buffer object is bound to the GL_DISPATCH_INDIRECT_BUFFER binding point and the parameters stored in the buffer consist of three unsigned integers, tightly packed together. Those three unsigned integers are equivalent to the parameters to glDispatchCompute(). The prototype for glDispatchComputeIndirect() is as follows:

void glDispatchComputeIndirect(GLintptr indirect);

Dispatch compute workgroups in three dimensions using parameters stored in a buffer object. indirect is the offset, in basic machine units, into the buffer’s data store at which the parameters are located. The parameters in the buffer at this offset are three, tightly packed unsigned integers representing the number of local workgroups to be dispatch. These unsigned integers are equivalent to the num_groups_x, num_groups_y, and num_groups_z parameters to glDispatchCompute(). Each parameter must be greater than zero and less than or equal to the corresponding element of the implementation-dependent constant vector GL_MAX_COMPUTE_WORK_GROUP_SIZE.

The data in the buffer bound to GL_DISPATCH_INDIRECT_BUFFER binding could come from anywhere—including another compute shader. As such, the graphics processor can be made to feed work to itself by writing the parameters for a dispatch (or draws) into a buffer object. Example 12.3 shows an example of dispatching compute workloads using glDispatchComputeIndirect().

Example 12.3 Dispatching Compute Workloads

// program is a successfully linked program object containing

// a compute shader executable

GLuint program = ...;

// Activate the program object

glUseProgram(program);

// Create a buffer, bind it to the DISPATCH_INDIRECT_BUFFER binding point and fill it with some data.

glGenBuffers(1, &dispatch_buffer);

glBindBuffer(GL_DISPATCH_INDIRECT_BUFFER, dispatch_buffer);

static const struct

{

GLuint num_groups_x;

GLuint num_groups_y;

GLuint num_groups_z;

} dispatch_params = { 16, 16, 1 };

glBufferData(GL_DISPATCH_INDIRECT_BUFFER, sizeof(dispatch_params), &dispatch_params, GL_STATIC_DRAW);

// Dispatch the compute shader using the parameters stored in the buffer object

glDispatchComputeIndirect(0);

Notice how in Example 12.3 we simply use glUseProgram() to set the current program object to the compute program. Aside from having no access to the fixed-function graphics pipeline (such as the rasterizer or framebuffer), compute shaders and the programs that they are linked into are completely normal, first-class shader and program objects. This means that you can use glGetProgramiv() to query their properties (such as active uniform or storage blocks) and can access uniforms as normal. Of course, compute shaders also have access to almost all of the resources that other types shaders have, including images, samplers, buffers, atomic counters, and uniform blocks.

Compute shaders and their linked programs also have several computespecific properties. For example, to retrieve the local workgroup size of a compute shader (which would have been set using a layout qualifier in the source of the compute shader), call glGetProgramiv() with pname set to GL_MAX_COMPUTE_WORK_GROUP_SIZE and param set to the address of an array of three unsigned integers. The three elements of the array will be filled with the size of the local workgroup size in the X, Y, and Z dimensions, in that order.

Knowing Where You Are

Once your compute shader is executing, it likely has the responsibility to set the value of one or more elements of some output array (such as an image or an array of atomic counters), or to read data from a specific location in an input array. To do this, you will need to know where in the local workgroup you are and where that workgroup is within the larger global workgroup. For these purposes, OpenGL provides several built-in variables to compute shaders. These built-in variables are implicitly declared as shown in Example 12.4.

Example 12.4 Declaration of Compute Shader Built-in Variables

const uvec3 gl_WorkGroupSize;

in uvec3 gl_NumWorkGroups;

in uvec3 gl_LocalInvocationID;

in uvec3 gl_WorkGroupID;

in uvec3 gl_GlobalInvocationID;

in uint gl_LocalInvocationIndex;

The compute shader built-in variables have the following definitions:

• gl_WorkGroupSize is a constant that stores the size of the local workgroup as declared by the local_size_x, local_size_y and

local_size_z layout qualifiers in the shader. Replicating this information here serves two purposes; first, it allows the workgroup

size to be referred to multiple times in the shader without relying on the preprocessor and second, it allows multidimensional workgroup

size to be treated as a vector without having to construct it explicitly.

• gl_NumWorkGroups is a vector that contains the parameters that were passed to glDispatchCompute() (num_groups_x, num_groups_y, and num_groups_z). This allows the shader to know the extent of the global workgroup that it is part of. Besides being more convenient than needing to set the values of uniforms by hand, some OpenGL implementations may have a very efficient path for setting these

constants.

• gl_LocalInvocationID is the location of the current invocation of a compute shader within the local workgroup. It will range from

uvec3(0) to gl_WorkGroupSize - uvec3(1).

• gl_WorkGroupID is the location of the current local workgroup within the larger global workgroup. This variable will range from uvec3(0) to

gl_NumWorkGroups - uvec3(1).

• gl_GlobalInvocationID is derived from gl_LocalInvocationID, gl_WorkGroupSize, and gl_WorkGroupID. Its exact value is equal to

gl_WorkGroupID * gl_WorkGroupSize + gl_LocalInvocationID and as such, it is effectively the three-dimensional index of the current

invocation within the global workgroup.

• gl_LocalInvocationIndex is a flattened form of gl_LocalInvocationID. It is equal to gl_LocalInvocationID.z *

gl_WorkGroupSize.x * gl_WorkGroupSize.y + gl_LocalInvocationID.y * gl_WorkGroupSize.x + gl_LocalInvocationID.x. It can be used to index into one-dimensional arrays that represent two- or three-dimensional data.

Given that we now know where we are within both the local workgroup and the global workgroup, we can use this information to operate on data. Taking the example of Example 12.5 and adding an image variable allows us to write into the image at a location derived from the coordinate of the invocation within the global workgroup and update it from our compute shader. This modified shader is shown in Example 12.5.

Example 12.5 Operating on Data

#version 430 core

layout (local_size_x = 32, local_size_y = 16) in;

// An image to store data into.

layout (rg32f) uniform image2D data;

void main(void)

{

// Store the local invocation ID into the image.

imageStore(data, ivec2(gl_GlobalInvocationID.xy), vec4(vec2(gl_LocalInvocationID.xy) / vec2(gl_WorkGroupSize.xy), 0.0, 0.0));

}

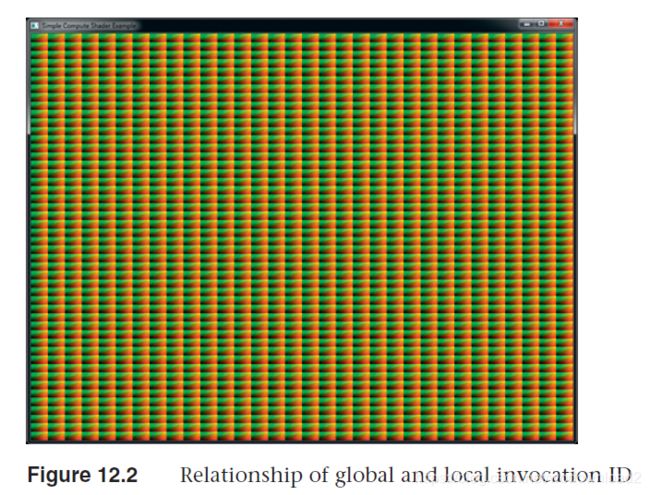

The shader shown in Example 12.5 simply takes the local invocation index, normalizes it to the local workgroup size, and stores the result into the data image at the location given by the global invocation ID. The resulting image shows the relationship between the global and local invocation IDs and clearly shows the rectangular local workgroup size specified in the compute shader (in this case, 32 × 16 work items). The resulting image is shown in Figure 12.2.

To generate the image of Figure 12.2, after being written by the compute shader, the texture is simply rendered to a full screen triangle fan.

communication and synchronization

when u call glDispatchCompute() (or glDispatchComputeIndirect()), a potentially huge amount of work is sent to the graphics processor. the graphics processor will run that work in parallel if it can, and the invocations that execute the compute shader can be considered to be a team trying to accomplish a task. teakwork is facilitated greatly by communication an so, while the order of execution and level of parallelism is not defined by opengl, some level of cooperation between the invocations is enabled by allowing them to communicate via shared variables. furthermore, it is possible to sync up all the invocations in the local workgroup so that they reach the same part of your shader at the same time.

communication 使用共享的关键字shared进行通信

the shared keyword is used to declare variables in shaders in a similar manner to other keywords such as uniform, in or out. Some example declarations using the shared keyword are shown in Example 12.6.

Example 12.6 Example of Shared Variable Declarations

// A single shared unsigned integer;

shared uint foo;

// A shared array of vectors

shared vec4 bar[128];

// A shared block of data

shared struct baz_struct

{

vec4 a_vector;

int an_integer;

ivec2 an_array_of_integers[27];

} baz[42];

when a variable is declared as shared, that means it will be kept in storage that is visible to all of the compute shader invocations in the same local workgroup. when one invocation of the compute shader writes to a shared variable, then the data it wrote will eventually become visible to other invocations of that shader within the same local workgroup. we say eventually because the relative order to execution of compute shader invocations is not defined——even within the same local workgroup. therefore, one shader invocation may write to a shared variable long before another invocation reads from that variable, or even long after the other invocation has read from that variable. to ensure that u get the results u expect, u need to include some synchronization primitives in your code. these are covered in detail in the next section.

the performance of access to shared variables is often significantly better than access to images or to shader storage buffers (i.e., main memory). as shared memory is local to a shader processor and may be duplicated throughout device, access to shared variables can be even faster than hitting the cache. for this reason, it is recommended that if your shader performs more than a few access to a region of memory, and especially if multiple shader invocations will access the same memory locations, that u first copy that memory into some shared variables in the shader, operate on them there, and then write the results back into main memory if required.

because it is expected that variables declared as shared will be stored inside the graphics processor in dedicated high-performance resources, and because those resources may be limited, it is possible to query the combined maximum size of all shared variables that can be accessed by a single compute program. to retrieve this limit, call glGetIntegerv() with pname set of GL_MAX_COMPUTE_SHARED_MEMORY_SIZE.

synchronization

If the order of execution of the invocations of a local workgroup and all of the local workgroups that make up the global workgroup are not defined, the operations that an invocation performs can occur out of order with respect to other invocations. If no communication between the invocations is required and they can all run completely independently of each other, then this likely isn’t going to be an issue. However, if the invocations need to communicate with each other either through images and buffers or through shared variables, then it may be necessary to synchronize their operations with each other.

there are two types of synchronization commands. the first is an execution barrier, which is invoked usign the barrier() function. this is similar to the barrier() function u can use in a tessellation control shader to synchronize the invocations that are processing the control points. when an invocation of a compute shader reaches a call to barrier(), it will stop executing and wait for other invocations within the same local workgroup to catch up. once the invocation resumes executing, having returned from the call to barrier(), it is safe to assume that all other invocations have also reached their corresponding call to barrier(), and have completed any operations that they performed before this call. the usage of barrier() in a compute shader is somewhat more flexible than what is allowed in a tessellation control shader. in particular, there is no requirement that barrier() be called only from the shader’s main() function. calls to barrier() must, however, only be executed inside uniform flow control.

that is, if one invocation within a local workgroup executes a barrier() function, then all invocations within that workgroup must also execute the same all. this seems logical as one invocation of the shader has no knowledge of the control flow of any other and must assume that the other invocations will eventually reach the barrier——if they do not, then deadlock can occur.

When communicating between invocations within a local workgroup, you can write to shared variables from one invocation and then read from them in another. However, you need to make sure that by the time you read from a shared variable in the destination invocation that the source invocation has completed the corresponding write to that variable. To

ensure this, you can write to the variable in the source invocation, and then in both invocations execute the barrier() function. When the destination invocation returns from the barrier() call, it can be sure that the source invocation has also executed the function (and therefore completed the write to the shared variable), and so it is safe to read from the variable.

examples

This section includes a number of example use cases for compute shaders. As compute shaders are designed to execute arbitrary work with very little fixed-function plumbing to tie them to specific functionality, they are very flexible and very powerful. As such, the best way to see them in action is to work through a few examples in order to see their application in real-world scenarios.

Physical Simulation

The first example is a simple particle simulator. In this example, we use a compute shader to update the positions of close to a million particles in real time. Although the physical simulation is very simple, it produces visually interesting results and demonstrates the relative ease with which this type of algorithm can be implemented in a compute shader.

The algorithm implemented in this example is as follows. Two large buffers are allocated, one which stores the current velocity of each particle and a second which stores the current position. At each time step, a compute shader executes and each invocation processes a single particle. The current velocity and position are read from their respective 各自的 buffers. A new velocity is calculated for the particle and then this velocity is used to update the particle’s position. The new velocity and position are then written back into the buffers. To make the buffers accessible to the shader, they are attached to buffer textures that are then used with image load and store operations. An alternative to buffer textures is to use shader storage

buffers, declared with as a buffer interface block. In this toy example, we don’t consider the interaction of the particles with

each other, which would be an O(n2) problem. Instead, we use a small number of attractors, each with a position and a mass. The mass of each particle is also considered to be the same. Each particle is considered to be gravitationally attracted to the attractors. The force exerted 施加 on the particle by each of the attractors is used to update the velocity of the particle by integrating over time. The positions and masses of the attractors are stored in a uniform block. In addition to a position and velocity, the particles have a life expectancy. The life expectancy of the particle is stored in the w component

of its position vector and each time the particle’s position is updated, its life expectancy is reduced slightly. Once its life expectancy is below a small threshold, it is reset to one, and rather than update the particle’s position,

we reset it to be close to the origin. We also reduce the particle’s velocity by two orders of magnitude. This causes aged particles (including those that may have been flung to the corners of the universe) to reappear at the center, creating a stream of fresh young particles to keep our simulation going.

The source code for the particle simulation shader is given in Example 12.7.

Example 12.7 Particle Simulation Compute Shader

#version 430 core

// Uniform block containing positions and masses of the attractors

layout (std140, binding = 0) uniform attractor_block

{

vec4 attractor[64]; // xyz = position, w = mass

};

// Process particles in blocks of 128

layout (local_size_x = 128) in;

// Buffers containing the positions and velocities of the particles

layout (rgba32f, binding = 0) uniform imageBuffer velocity_buffer;

layout (rgba32f, binding = 1) uniform imageBuffer position_buffer;

// Delta time

uniform float dt;

void main(void)

{

// Read the current position and velocity from the buffers

vec4 vel = imageLoad(velocity_buffer, int(gl_GlobalInvocationID.x));

vec4 pos = imageLoad(position_buffer, int(gl_GlobalInvocationID.x));

int i;

// Update position using current velocity * time

pos.xyz += vel.xyz * dt;

// Update "life" of particle in w component

pos.w -= 0.0001 * dt;

// For each attractor...

for (i = 0; i < 4; i++)

{

// Calculate force and update velocity accordingly

vec3 dist = (attractor[i].xyz - pos.xyz);

vel.xyz += dt * dt * attractor[i].w * normalize(dist) / (dot(dist, dist) + 10.0);

}

// If the particle expires, reset it

if (pos.w <= 0.0)

{

pos.xyz = -pos.xyz * 0.01;

vel.xyz *= 0.01;

pos.w += 1.0f;

}

// Store the new position and velocity back into the buffers

imageStore(position_buffer, int(gl_GlobalInvocationID.x), pos);

imageStore(velocity_buffer, int(gl_GlobalInvocationID.x), vel);

}

to kick off 开始,开球 the simulation, we first create two buffer objects that will store the positions and velocities of all of the particles. the position of each particle is set to a random location in the vicinity 附近 of the origin and its life expectancy is set to random value between zero and one. this means that each particle will reach the end of its first iteration and be brought back to the origin after a random amount of time. the velocity of each particle is also initialized to a random vector with a small magnitude. the code to do this is shown in example 12.8.

Example 12.8 Initializing Buffers for Particle Simulation

// Generate two buffers, bind them and initialize their data stores

glGenBuffers(2, buffers);

glBindBuffer(GL_ARRAY_BUFFER, position_buffer);

glBufferData(GL_ARRAY_BUFFER, PARTICLE_COUNT * sizeof(vmath::vec4), NULL, GL_DYNAMIC_COPY);

// Map the position buffer and fill it with random vectors

vmath::vec4 * positions = (vmath::vec4 *) glMapBufferRange(GL_ARRAY_BUFFER, 0, PARTICLE_COUNT * sizeof(vmath::vec4),

GL_MAP_WRITE_BIT |GL_MAP_INVALIDATE_BUFFER_BIT);

for (i = 0; i < PARTICLE_COUNT; i++)

{

positions[i] = vmath::vec4(random_vector(-10.0f, 10.0f),

random_float());

}

glUnmapBuffer(GL_ARRAY_BUFFER);

// Initialization of the velocity buffer - also filled with random vectors

glBindBuffer(GL_ARRAY_BUFFER, velocity_buffer);

glBufferData(GL_ARRAY_BUFFER,

PARTICLE_COUNT * sizeof(vmath::vec4),

NULL,

GL_DYNAMIC_COPY);

vmath::vec4 * velocities = (vmath::vec4 *) glMapBufferRange(GL_ARRAY_BUFFER, 0, PARTICLE_COUNT * sizeof(vmath::vec4), GL_MAP_WRITE_BIT |GL_MAP_INVALIDATE_BUFFER_BIT);

for (i = 0; i < PARTICLE_COUNT; i++)

{

velocities[i] = vmath::vec4(random_vector(-0.1f, 0.1f), 0.0f);

}

glUnmapBuffer(GL_ARRAY_BUFFER);

the masses of the attractors are also set to random numbers between 0.5 and 1.0. their positions are initialized to zero, but these will be moved during the rendering loop. their masses 质量 are stored in a variable in the appliation because, as they are fixed, they need to be restored after each update of the uniform buffer containing the updated positions of the attractors. finally, the position buffer is attached to a vertex array object so that the particles can be rendered as points.

the rendering loop is quite simple. First, we execute the compute shader with sufficient invocations to update all of the particles. Then, we render all of the particles as points with a single call to glDrawArrays(). The shader vertex shader simply transforms the incoming vertex position by a perspective transformation matrix and the fragment shader outputs solid

white. The result of rendering the particle system as simple, white points is shown in Figure 12.3.

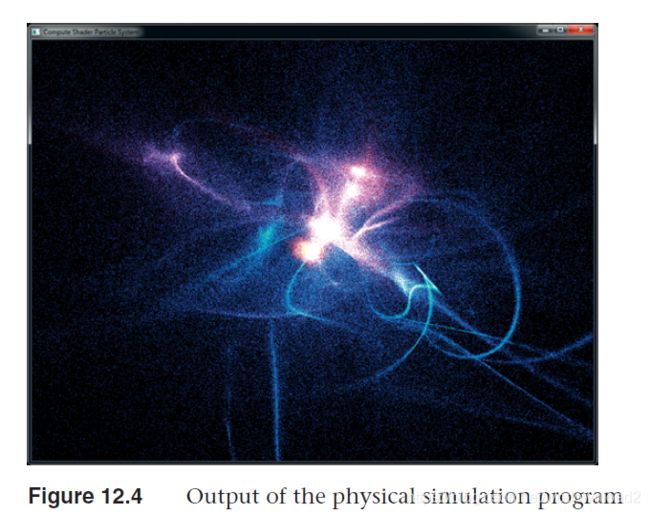

The initial output of the program is not terribly exciting. While it does demonstrate that the particle simulation is working, the visual complexity of the scene isn’t high. To add some interest to the output (this is a graphics API after all), we add some simple shading to the points.

In the fragment shader for rendering the points, we first use the age of the point (which is stored in its w component) to fade the point from red hot to cool blue as it gets older. Also, we turn on additive blending by enabling GL_BLEND and setting both the source and destination factors to GL_ONE. This causes the points to accumulate in the framebuffer and for

more densely populated areas to ‘‘glow’’ due to the number of particles in the region. The fragment shader used to do this is shown in Listing 12.9.

Example 12.9 Particle Simulation Fragment Shader

#version 430 core

layout (location = 0) out vec4 color;

// This is derived from the age of the particle read

// by the vertex shader

in float intensity;

void main(void)

{

// Blend between red-hot and cool-blue based on the

// age of the particle.

color = mix(vec4(0.0f, 0.2f, 1.0f, 1.0f),

vec4(0.2f, 0.05f, 0.0f, 1.0f), intensity);

}

in our rendering loop, the positions and masses of the attractors are updated before we dispatch the compute shader over the buffers containing the positions and velocities. We then render the particles as points having issued a memory barrier to ensure that the writes performed by the compute shader have been completed.

This loop is shown in Example 12.10.

// Update the buffer containing the attractor positions and masses

vmath::vec4 * attractors =

(vmath::vec4 *)glMapBufferRange(GL_UNIFORM_BUFFER,

0,

32 * sizeof(vmath::vec4),

GL_MAP_WRITE_BIT |

GL_MAP_INVALIDATE_BUFFER_BIT);

int i;

for (i = 0; i < 32; i++)

{

attractors[i] =vmath::vec4(sinf(time * (float)(i + 4) * 7.5f * 20.0f) * 50.0f,

cosf(time * (float)(i + 7) * 3.9f * 20.0f) * 50.0f,

sinf(time * (float)(i + 3) * 5.3f * 20.0f) *

cosf(time * (float)(i + 5) * 9.1f) * 100.0f,

attractor_masses[i]);

}

glUnmapBuffer(GL_UNIFORM_BUFFER);

// Activate the compute program and bind the position

// and velocity buffers

glUseProgram(compute_prog);

glBindImageTexture(0, velocity_tbo, 0,

GL_FALSE, 0,

GL_READ_WRITE, GL_RGBA32F);

glBindImageTexture(1, position_tbo, 0,

GL_FALSE, 0,

GL_READ_WRITE, GL_RGBA32F);

// Set delta time

glUniform1f(dt_location, delta_time);

// Dispatch the compute shader

glDispatchCompute(PARTICLE_GROUP_COUNT, 1, 1);

// Ensure that writes by the compute shader have completed

glMemoryBarrier(GL_SHADER_IMAGE_ACCESS_BARRIER_BIT);

// Set up our mvp matrix for viewing

vmath::mat4 mvp = vmath::perspective(45.0f, aspect_ratio,

0.1f, 1000.0f) *

vmath::translate(0.0f, 0.0f, -60.0f) *

vmath::rotate(time * 1000.0f,

vmath::vec3(0.0f, 1.0f, 0.0f));

// Clear, select the rendering program and draw a full screen quad

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

glUseProgram(render_prog);

glUniformMatrix4fv(0, 1, GL_FALSE, mvp);

glBindVertexArray(render_vao);

glEnable(GL_BLEND);

glBlendFunc(GL_ONE, GL_ONE);

glDrawArrays(GL_POINTS, 0, PARTICLE_COUNT);

finally, the result of rendering the particle system with the fragment shader of example 12.9 and with blending turned on is shown in figure 12.4.

chapter summary

in this chapter, u have read an introduction to compute shaders. as they are not tied to a specific part of the traditional graphics pipeline and have no fixed intended use, the amount that could be written about compute shader is enormous. instead, we have covered the basics and provided a couple of examples that should demonstrate how compute shaders may be used to perform the nongraphics parts of your graphics applications.