DS-SLAM代码解析和问题整理 (一)

目录

- 资源

- 介绍

- ros_tum_realtime.cc

-

- Step 1.前期工作

-

- 1.1 定义了一个ROS的节点

- 1.2 从tum的associate.txt文件读取时间戳,RGB图像,时间戳,深度图像

- 1.3 检查RGB图像和深度图像

- 1.4 创建一个查看器

- Step 2.创建一个SLAM系统

-

- 2.1 创建SLAM系统

- 2.2 创建一个节点的句柄,并发布话题

- 2.3 对于所有图片

-

- 2.3.1 加载RGB图像,深度图和时间戳

- 2.3.2 检查图片是否加载成功

- 2.3.3 (重点关注)在一个while循环里面遍历数据集里面的每一幅图片,并将深度图imD,RGB图imRGB逐个发送到SLAM系统之中,并可以获得当前帧的位姿矩阵Tcw

- 2.3.4 记录每一帧图像处理的时间

- 2.3.5 (重点关注)通过ROS将位姿矩阵T发送到前面的3个Topic

- step 3 如果所有的图像都预测完了,那么终止当前的SLAM系统

- step 4 计算平均耗时

- step 5 保存TUM格式的相机轨迹

- 参考文献

资源

- DS-SLAM代码: https://github.com/ivipsourcecode/DS-SLAM

- DS-SLAM论文: https://arxiv.org/abs/1809.08379v1

介绍

-

DS-SLAM是一篇18年的论文,时间相对较早,论文的深度学习模型使用了caffe-segnet-cudnn5版本.(所以在安装DS-SLAM时最好使用9.0版本及以下的CUDA)

-

引用原文:本文提出了一种针对动态环境的语义视觉SLAM,称为DS-SLAM。DS-SLAM中有五个线程并行运行:跟踪,语义分割,局部建图,回环检测和稠密语义建图。DS-SLAM结合了语义分割网络和移动一致性检查方法,以减少动态对象的影响,从而在动态环境中大大提高了定位精度。同时,生成了密集的语义八叉树图,可用于高级任务。

-

所以我们在代码上主要关注Frame.cc, ORBextractor.cc(作者代码里说要关注ORBmatcher,但里面只有一些微小的改动,感觉是作者写错了), Pointcloudmapping.cc and Segment.cc这几个文件(点击进行跳转).

ros_tum_realtime.cc

我们首先从主函数开始,方便对于整个系统框架有个理解.

主函数主要做了 件事:

Step 1.前期工作

1.1 定义了一个ROS的节点

ros::init(argc, argv, "TUM");

1.2 从tum的associate.txt文件读取时间戳,RGB图像,时间戳,深度图像

LoadImages(strAssociationFilename, vstrImageFilenamesRGB, vstrImageFilenamesD, vTimestamps);

1.3 检查RGB图像和深度图像

if(vstrImageFilenamesRGB.empty())

{

cerr << endl << "No images found in provided path." << endl;

return 1;

}

else if(vstrImageFilenamesD.size()!=vstrImageFilenamesRGB.size())

{

cerr << endl << "Different number of images for rgb and depth." << endl;

return 1;

}

1.4 创建一个查看器

ORB_SLAM2::Viewer *viewer;

viewer = new ORB_SLAM2::Viewer();

Step 2.创建一个SLAM系统

2.1 创建SLAM系统

ORB_SLAM2::System SLAM(argv[1],argv[2], argv[5],argv[6],argv[7],ORB_SLAM2::System::RGBD, viewer);

2.2 创建一个节点的句柄,并发布话题

ros::NodeHandle nh; //创建一个节点的句柄

// 发布了消息类型为geometry_msgs::PoseStamped 的消息,话题为/Camera_Pose" ,消息队列的大小为1

CamPose_Pub = nh.advertise<geometry_msgs::PoseStamped>("/Camera_Pose",1);

// 发布了话题/Camera_Odom ,消息队列大小为1

Camodom_Pub = nh.advertise<geometry_msgs::PoseWithCovarianceStamped>("/Camera_Odom", 1);

// 发布了话题/odom ,消息队列大小为50

odom_pub = nh.advertise<nav_msgs::Odometry>("odom", 50);

2.3 对于所有图片

while(ros::ok()&&ni其中int nImages = vstrImageFilenamesRGB.size(); // 当前图像序列中的图像数目

2.3.1 加载RGB图像,深度图和时间戳

imRGB = cv::imread(string(argv[3])+"/"+vstrImageFilenamesRGB[ni],CV_LOAD_IMAGE_UNCHANGED);

imD = cv::imread(string(argv[3])+"/"+vstrImageFilenamesD[ni],CV_LOAD_IMAGE_UNCHANGED);

double tframe = vTimestamps[ni];

2.3.2 检查图片是否加载成功

if(imRGB.empty())

{

cerr << endl << "Failed to load image at: "

<< string(argv[3]) << "/" << vstrImageFilenamesRGB[ni] << endl;

return 1;

}

2.3.3 (重点关注)在一个while循环里面遍历数据集里面的每一幅图片,并将深度图imD,RGB图imRGB逐个发送到SLAM系统之中,并可以获得当前帧的位姿矩阵Tcw

Camera_Pose = SLAM.TrackRGBD(imRGB,imD,tframe);

2.3.4 记录每一帧图像处理的时间

double orbTimeTmp=SLAM.mpTracker->orbExtractTime;

double movingTimeTmp=SLAM.mpTracker->movingDetectTime;

segmentationTime=SLAM.mpSegment->mSegmentTime;

2.3.5 (重点关注)通过ROS将位姿矩阵T发送到前面的3个Topic

Pub_CamPose(Camera_Pose);

void Pub_CamPose(cv::Mat &pose)

这里的位姿矩阵时一个4*4的矩阵,里面包含了平移和旋转的信息.

//定义了一个TF广播,用于之后将位姿信息广播到TF树:

orb_slam_broadcaster = new tf::TransformBroadcaster;

if(pose.dims<2 || pose.rows < 3) //如果位姿的维度或行数不符合,就跳过本次广播

{

Rwc = Rwc;

twc = twc;

}

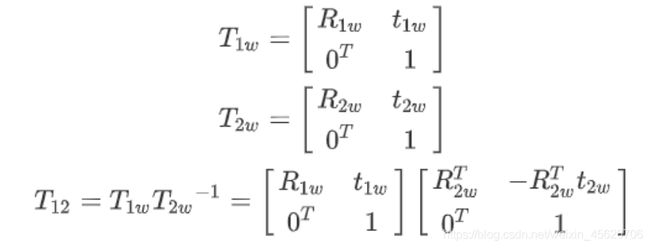

// 如果满足条件,就取出T矩阵里面的旋转矩阵R和平移向量t,并将旋转矩阵R转化为四元数Q,

Rwc = pose.rowRange(0,3).colRange(0,3).t();

twc = -Rwc*pose.rowRange(0,3).col(3);

rotationMat << …

//将旋转矩阵R 转化为四元数 Q

Eigen::Quaterniond Q(rotationMat);

- 此处twc =-Rwc*pose.rowRange(0,3).col(3);是因为在求位姿的逆.即系统像发布的是相机坐标系到世界坐标系的变换矩阵Twc

// 将位姿信息放到tf::Transform orb_slam中里面(为了最终传播给ros)

Pose_quat[0] = Q.x(); Pose_quat[1] = Q.y();

Pose_quat[2] = Q.z(); Pose_quat[3] = Q.w();

Pose_trans[0] = twc.at(0);

Pose_trans[1] = twc.at(1);

Pose_trans[2] = twc.at(2);

orb_slam.setOrigin(tf::Vector3(Pose_trans[2], -Pose_trans[0], -Pose_trans[1]));

orb_slam.setRotation(tf::Quaternion(Q.z(), -Q.x(), -Q.y(), Q.w()));

将这个变换矩阵广播到TF树上面去:

orb_slam_broadcaster->sendTransform(tf::StampedTransform(orb_slam, // 存储的是变换矩阵

ros::Time::now(), // 时间戳

"/map", // 父坐标系

"/base_link")); //子坐标系

- 我们不仅要把SLAM计算的位姿矩阵广播到TF树上面去,还要把这个位姿矩阵发布到topic上面去,因此我们需要将Transform的数据类型转换为ros的msg消息类型

//赋予geometry_msgs::PoseStamped的时间戳,frame_id

Cam_Pose.header.stamp = ros::Time::now();

Cam_Pose.header.frame_id = “/map”;

// 分别将点(位移),和四元数转换到msg格式

tf::pointTFToMsg(orb_slam.getOrigin(), Cam_Pose.pose.position);

tf::quaternionTFToMsg(orb_slam.getRotation(), Cam_Pose.pose.orientation);

同理对geometry_msgs::PoseWithCovarianceStamped类型的Cam_odom也进行一样的操作,不同的是geometry_msgs::PoseWithCovarianceStamped需要定义一个位姿的协方差

Cam_odom.pose.covariance = {0.01, 0, 0, 0, 0, 0,

0, 0.01, 0, 0, 0, 0,

0, 0, 0.01, 0, 0, 0,

0, 0, 0, 0.01, 0, 0,

0, 0, 0, 0, 0.01, 0,

0, 0, 0, 0, 0, 0.01};

//发布消息

CamPose_Pub.publish(Cam_Pose);

Camodom_Pub.publish(Cam_odom);

同理对nav_msgs::Odometry类型的odom也进行一样的操作,不同的是nav_msgs::Odometry需要定义三个方向的速度

double dt = (current_time - last_time).toSec();

double vx = (Cam_odom.pose.pose.position.x - lastx)/dt;

double vy = (Cam_odom.pose.pose.position.y - lasty)/dt;

double vth = (Cam_odom.pose.pose.orientation.z - lastth)/dt;

odom.twist.twist.linear.x = vx;

odom.twist.twist.linear.y = vy;

odom.twist.twist.angular.z = vth;

更新位置和时间,用于下一次计算nav_msgs::Odometry三个方向的速度

last_time = current_time;

lastx = Cam_odom.pose.pose.position.x;

lasty = Cam_odom.pose.pose.position.y;

lastth = Cam_odom.pose.pose.orientation.z;

step 3 如果所有的图像都预测完了,那么终止当前的SLAM系统

SLAM.Shutdown();

step 4 计算平均耗时

cout << "median tracking time: " << vTimesTrack[nImages/2] << endl;

cout << "mean tracking time: " << totaltime/nImages << endl;

cout << "mean orb extract time =" << orbTotalTime/nImages << endl;

cout << "mean moving detection time =" << movingTotalTime/nImages<< endl;

cout << "mean segmentation time =" << segmentationTime/nImages<< endl;

step 5 保存TUM格式的相机轨迹

SLAM.SaveTrajectoryTUM("/home/z/catkin_ws/src/DS-SLAM/result/CameraTrajectory.txt");

SLAM.SaveKeyFrameTrajectoryTUM("/home/z/catkin_ws/src/DS-SLAM/result/KeyFrameTrajectory.txt");

参考文献

https://blog.csdn.net/qq_41623632/article/details/112911046?spm=1001.2014.3001.5501

https://zhuanlan.zhihu.com/p/152063872?utm_source=wechat_timeline