Data Mining Weekly Report, Week 9

1. This week's work focused on model fusion and parameter tuning on the dataset. After preprocessing, I obtained a merged dataset from base_info.csv and entprise_info.csv.
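
As a rough illustration of the merge step, the sketch below joins two small in-memory tables with pandas. The key column `id` and the columns `label` and `regcap` are assumptions made for the example; the report does not show the actual schema or the merge code.

```python
import pandas as pd

# Toy stand-ins for the two CSV files; the column names are hypothetical.
base_info = pd.DataFrame({
    "id": ["a", "b", "c"],
    "regcap": [100.0, None, 50.0],
})
entprise_info = pd.DataFrame({
    "id": ["a", "b"],
    "label": [0, 1],
})

# A left join keeps every labelled row and attaches its features.
merged = entprise_info.merge(base_info, on="id", how="left")
print(merged)
```

A left join from the labelled table keeps the number of training rows unchanged, which makes it easy to spot rows that have no matching features.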

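2. I first trained on my dataset with the model from the practice competition, but the results were not good, possibly because of the parameters. I therefore dropped the practice-competition model, looked up LightGBM (LGBM) online, and trained it on its own, adjusting the parameters by hand and submitting the results. Locally the score was 0.8443, but online it was only 0.76.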

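3. With such a large gap between the local and online scores, I suspected overfitting. I went back to the parameter settings, asked others for tuning tips, and then tried Bayesian optimization: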

    import lightgbm as lgb
    from sklearn import metrics

    # X_train, y_train, X_test and y_test are assumed to be defined earlier.
    def LGB_bayesian(
            num_leaves,               # int
            min_data_in_leaf,         # int
            learning_rate,
            min_sum_hessian_in_leaf,  # float
            feature_fraction,
            lambda_l1,
            lambda_l2,
            min_gain_to_split,
            max_depth):               # int
        # The optimizer proposes floats, but LightGBM expects these three
        # parameters to be integers, so cast them.
        num_leaves = int(num_leaves)
        min_data_in_leaf = int(min_data_in_leaf)
        max_depth = int(max_depth)

        assert isinstance(num_leaves, int)
        assert isinstance(min_data_in_leaf, int)
        assert isinstance(max_depth, int)

        param = {
            'num_leaves': num_leaves,
            'min_data_in_leaf': min_data_in_leaf,
            'learning_rate': learning_rate,
            'min_sum_hessian_in_leaf': min_sum_hessian_in_leaf,
            'feature_fraction': feature_fraction,
            'lambda_l1': lambda_l1,
            'lambda_l2': lambda_l2,
            'min_gain_to_split': min_gain_to_split,
            'max_depth': max_depth,
            'save_binary': True,
            'max_bin': 63,
            'bagging_fraction': 0.4,
            'bagging_freq': 5,
            'seed': 2019,
            # 'feature_fraction_seed': 2019,
            # 'bagging_seed': 2019,
            # 'drop_seed': 2019,
            # 'data_random_seed': 2019,
            'objective': 'binary',
            'boosting_type': 'gbdt',
            'verbose': -1,
            'metric': 'auc',
            # 'tree_learner': 'serial',
            # 'is_unbalance': True,
            # 'boost_from_average': False,
        }

        lgtrain = lgb.Dataset(X_train, label=y_train)
        lgval = lgb.Dataset(X_test, label=y_test)

        # early_stopping_rounds and verbose_eval are accepted by LightGBM < 4.0;
        # newer versions expect the equivalent callbacks instead.
        model = lgb.train(param, lgtrain, 20000, valid_sets=[lgval],
                          early_stopping_rounds=100, verbose_eval=3000)

        # Score the model at its best iteration. The same split drives early
        # stopping and the score, so the returned AUC is likely optimistic.
        pred_val_y = model.predict(X_test, num_iteration=model.best_iteration)
        score = metrics.roc_auc_score(y_test, pred_val_y)
        return score

    # Search range (lower, upper) for each tuned parameter.
    bounds_LGB = {
        'num_leaves': (5, 20),
        'min_data_in_leaf': (5, 100),
        'learning_rate': (0.005, 0.3),
        'min_sum_hessian_in_leaf': (0.00001, 20),
        'feature_fraction': (0.001, 0.5),
        'lambda_l1': (0, 10),
        'lambda_l2': (0, 10),
        'min_gain_to_split': (0, 1.0),
        'max_depth': (3, 200),
    }

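    from bayes_opt import BayesianOptimization

    LGB_BO = BayesianOptimization(LGB_bayesian, bounds_LGB, random_state=2019)

    init_points = 5   # random evaluations before the guided search starts
    n_iter = 200      # guided optimization steps

    # The original snippet stops before the search is started; presumably it
    # was launched like this.
    LGB_BO.maximize(init_points=init_points, n_iter=n_iter)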

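For some reason, though, this fancy approach had little effect and the results barely improved. I began to suspect that my dataset had not been processed well, so I found a baseline and looked at how others handled the data. Their processing turned out to be much the same as mine: drop the columns with many missing values and convert object-type columns to numeric ones. Their preprocessing was very simple overall, yet their LGBM results were quite good.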
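
The two preprocessing steps described above can be sketched with pandas as follows. This is a minimal illustration, not the baseline's actual code: the function name `simple_preprocess`, the 50% missing-value threshold, and the toy columns are all assumptions.

```python
import pandas as pd
from pandas.api.types import is_numeric_dtype

def simple_preprocess(df, max_missing_ratio=0.5):
    """Drop sparse columns, then integer-encode non-numeric columns."""
    # Keep only columns whose share of missing values is within the threshold.
    keep = df.columns[df.isna().mean() <= max_missing_ratio]
    out = df[keep].copy()
    # Encode each non-numeric column as category codes (missing values become -1).
    for col in out.columns:
        if not is_numeric_dtype(out[col]):
            out[col] = out[col].astype("category").cat.codes
    return out

# Hypothetical toy data.
raw = pd.DataFrame({
    "industry": ["retail", "tech", None, "retail"],
    "mostly_empty": [None, None, None, 1.0],
    "capital": [10.0, 20.0, 30.0, None],
})
clean = simple_preprocess(raw)
print(clean)
```

Numeric missing values are left as NaN here, since tree-based models such as LightGBM can handle them directly.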

4. Later I looked up LGBM parameter tuning again and mainly searched over the following parameters:

    parameters = {
        'max_depth': [15, 20, 25, 30, 35],
        'learning_rate': [0.01, 0.02, 0.05, 0.1, 0.15],
        'feature_fraction': [0.6, 0.7, 0.8, 0.9, 0.95],
        'bagging_fraction': [0.6, 0.7, 0.8, 0.9, 0.95],
        'bagging_freq': [2, 4, 5, 6, 8],
        'lambda_l1': [0, 0.1, 0.4, 0.5, 0.6],
        'lambda_l2': [0, 10, 15, 35, 40],
        'cat_smooth': [1, 10, 15, 20, 35]
    }

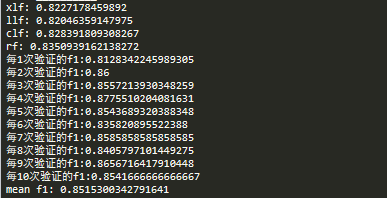

The results were acceptable.
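
The report does not say how the grid in step 4 was searched. As a rough guide to the cost, the sketch below counts the candidates for an exhaustive search and for tuning one parameter at a time; the variable names `full_grid` and `one_at_a_time` are introduced only for this illustration.

```python
import math

parameters = {
    'max_depth': [15, 20, 25, 30, 35],
    'learning_rate': [0.01, 0.02, 0.05, 0.1, 0.15],
    'feature_fraction': [0.6, 0.7, 0.8, 0.9, 0.95],
    'bagging_fraction': [0.6, 0.7, 0.8, 0.9, 0.95],
    'bagging_freq': [2, 4, 5, 6, 8],
    'lambda_l1': [0, 0.1, 0.4, 0.5, 0.6],
    'lambda_l2': [0, 10, 15, 35, 40],
    'cat_smooth': [1, 10, 15, 20, 35],
}

# Exhaustive search trains one model per combination of values (5 ** 8).
full_grid = math.prod(len(values) for values in parameters.values())

# Tuning one parameter at a time, with the others fixed, needs far fewer fits.
one_at_a_time = sum(len(values) for values in parameters.values())

print(full_grid, one_at_a_time)
```

With cross-validation, each candidate is trained once per fold, so an exhaustive search over this grid is rarely practical. Tuning parameters in small groups or sampling the grid at random is a common compromise.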

5. Finally, I also tried fusing several models, predicting with a weighted average of their outputs (partial code):

    import numpy as np

    # Partial code: sk (the cross-validation splitter), eval_score, train_data
    # and kind (the labels) are defined elsewhere, as are the four models,
    # which judging by the variable names are xlf (XGBoost), llf (LightGBM),
    # clf (CatBoost) and rf (random forest).
    for train, test in sk.split(train_data, kind):
        x_train = train_data.iloc[train]
        y_train = kind.iloc[train]
        x_test = train_data.iloc[test]
        y_test = kind.iloc[test]

        # Fit each model on the fold and record its F1 score on the held-out part.
        xlf.fit(x_train, y_train)
        pred_xgb = xlf.predict(x_test)
        weight_xgb = eval_score(y_test, pred_xgb)['f1']

        llf.fit(x_train, y_train)
        pred_llf = llf.predict(x_test)
        weight_lgb = eval_score(y_test, pred_llf)['f1']

        clf.fit(x_train, y_train)
        pred_cab = clf.predict(x_test)
        weight_cab = eval_score(y_test, pred_cab)['f1']

        rf.fit(x_train, y_train)
        pred_rf = rf.predict(x_test)
        weight_rf = eval_score(y_test, pred_rf)['f1']

        prob_xgb = xlf.predict_proba(x_test)
        prob_lgb = llf.predict_proba(x_test)
        prob_cab = clf.predict_proba(x_test)
        prob_rf = rf.predict_proba(x_test)

        # Try every weight combination on a 0.1 grid that sums to 1.
        scores = []
        ijkl = []
        weight = np.arange(0, 1.05, 0.1)
        eps = 1e-9  # tolerance so float rounding does not drop valid combinations

        for item1 in weight:
            for item2 in weight[weight <= 1 - item1 + eps]:
                for item3 in weight[weight <= 1 - item1 - item2 + eps]:
                    item4 = 1 - item1 - item2 - item3
                    prob_end = (prob_xgb * item1 + prob_lgb * item2
                                + prob_cab * item3 + prob_rf * item4)
                    # prob_end = np.sqrt(prob_xgb**2 * item1 + prob_lgb**2 * item2
                    #                    + prob_cab**2 * item3 + prob_rf**2 * item4)
                    score = eval_score(y_test, np.argmax(prob_end, axis=1))['f1']
                    scores.append(score)
                    ijkl.append((item1, item2, item3, item4))
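
The partial code above collects a score for every weight combination but stops before choosing one. The sketch below shows one way the selection could be done, on toy data. The helpers `weight_grid`, `best_blend` and `accuracy` are hypothetical names introduced for illustration, and plain accuracy stands in for the `eval_score` F1 metric, which is not shown in the report.

```python
import itertools
import numpy as np

def weight_grid(n_models, steps=10):
    """Yield every weight tuple on a 1/steps grid whose entries sum to 1."""
    for combo in itertools.product(range(steps + 1), repeat=n_models - 1):
        rest = steps - sum(combo)  # integer arithmetic avoids float drift
        if rest >= 0:
            yield tuple(c / steps for c in combo + (rest,))

def best_blend(probs, y_true, score_fn, steps=10):
    """Return (best_score, best_weights) for a weighted average of probabilities."""
    best_score, best_weights = -np.inf, None
    for weights in weight_grid(len(probs), steps):
        blended = sum(w * p for w, p in zip(weights, probs))
        score = score_fn(y_true, blended.argmax(axis=1))
        if score > best_score:
            best_score, best_weights = score, weights
    return best_score, best_weights

def accuracy(y_true, y_pred):
    return float(np.mean(y_true == y_pred))

# Toy class probabilities from two hypothetical models on four samples.
y_true = np.array([0, 1, 1, 0])
prob_a = np.array([[0.9, 0.1], [0.2, 0.8], [0.3, 0.7], [0.6, 0.4]])
prob_b = np.array([[0.4, 0.6], [0.6, 0.4], [0.4, 0.6], [0.4, 0.6]])

best_score, best_weights = best_blend([prob_a, prob_b], y_true, accuracy)
print(best_score, best_weights)
```

Weights chosen on the same fold they are scored on tend to look better than they are, so it is safer to select them on one split and confirm them on another.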

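6. The main problem I ran into is the large gap between local and online scores, probably caused by differences between the online test set and my validation set. The top teams online did not use particularly sophisticated models either; their discussions focused more on how to process the dataset. Chatting with people in the group also taught me a lot, for example that some competitions have data-leakage problems, and how to deal with overfitting.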
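
One way to check for overfitting before submitting is to compare training and held-out scores under stratified K-fold cross-validation. The sketch below is only an illustration under stated assumptions: it uses synthetic data and an unpruned scikit-learn decision tree as a stand-in for the report's models, and `cv_gap` is a hypothetical helper.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import StratifiedKFold
from sklearn.tree import DecisionTreeClassifier

# Synthetic binary data with 20% of the labels flipped at random.
X, y = make_classification(n_samples=600, n_features=20, n_informative=5,
                           flip_y=0.2, random_state=0)

def cv_gap(model, X, y, n_splits=5, seed=0):
    """Return the mean training AUC and mean validation AUC over the folds."""
    skf = StratifiedKFold(n_splits=n_splits, shuffle=True, random_state=seed)
    train_scores, valid_scores = [], []
    for train_idx, valid_idx in skf.split(X, y):
        model.fit(X[train_idx], y[train_idx])
        train_prob = model.predict_proba(X[train_idx])[:, 1]
        valid_prob = model.predict_proba(X[valid_idx])[:, 1]
        train_scores.append(roc_auc_score(y[train_idx], train_prob))
        valid_scores.append(roc_auc_score(y[valid_idx], valid_prob))
    return float(np.mean(train_scores)), float(np.mean(valid_scores))

train_auc, valid_auc = cv_gap(DecisionTreeClassifier(random_state=0), X, y)
print(f"train AUC {train_auc:.3f}, validation AUC {valid_auc:.3f}")
```

A training score far above the validation score suggests the model is memorizing the training folds. If the cross-validated score is also far above the online score, a difference between the training and test distributions is the more likely cause.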