ORB-SLAM2 (Part 3): Simulation & Online Localization and Mapping with kinect2

Contents

- Preface

- Simulation

- Online localization and mapping with kinect2

- Summary

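Preface

This is the third article in a series on running the ORB-SLAM2 code. It covers running ORB-SLAM2 in a simulation environment and performing online localization and mapping with a kinect2. It assumes the source code has already been downloaded and compiled and the environment has been configured; for the setup details, see "ORB-SLAM2: Environment Setup & Source Compilation".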

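Simulation

This section uses the robot simulation environment provided by DroidAITech (北京中科重德智能科技有限公司). It includes the XBot robot and a simulated Chinese Academy of Sciences software museum, and it already ships a launch file for ORB_SLAM2, which makes it convenient to run. First download it from GitHub:

- Download the environment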

cd ~/catkin_ws/src

git clone https://github.com/DroidAITech/ROS-Academy-for-Beginners.git

- Install dependencies

Install the dependencies as described in the course handout; otherwise the build may fail.

cd ~/catkin_ws

rosdep install --from-paths src --ignore-src --rosdistro=kinetic -y

Update Gazebo and make sure its version is 7.0 or later. The handout recommends 7.9; upgrading directly is enough.

# gazebo -v   # check the Gazebo version

sudo sh -c 'echo "deb http://packages.osrfoundation.org/gazebo/ubuntu-stable `lsb_release -cs` main" > /etc/apt/sources.list.d/gazebo-stable.list'

wget http://packages.osrfoundation.org/gazebo.key -O - | sudo apt-key add -

sudo apt-get update

sudo apt-get install gazebo7

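- Modify the topic names

The steps above set up the simulation environment. Next, the ORB-SLAM2 source has to be modified so that the topic names it subscribes to match the topic names published by the simulation. First locate the following file: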

~/catkin_ws/src/ORB_SLAM2/Examples/ROS/ORB_SLAM2/src/ros_rgbd.cc

Change the depth topic name (depth_registered --> depth):

message_filters::Subscriber<sensor_msgs::Image> rgb_sub(nh, "/camera/rgb/image_raw", 1); // no change needed

// original: message_filters::Subscriber<sensor_msgs::Image> depth_sub(nh, "camera/depth_registered/image_raw", 1);

message_filters::Subscriber<sensor_msgs::Image> depth_sub(nh, "camera/depth/image_raw", 1);

- Rebuild ORB-SLAM2

Because we use the RGB-D mode under ROS, only build_ros.sh needs to be run, from the ORB_SLAM2 source directory:

chmod +x build_ros.sh

./build_ros.sh

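- Run

Run the following commands in separate terminals to run ORB-SLAM2 in the simulation environment:

roslaunch robot_sim_demo robot_spawn.launch   # start the simulation environment

roslaunch orbslam2_demo ros_orbslam2.launch   # run the ORB-SLAM2 algorithm

rosrun robot_sim_demo robot_keyboard_teleop.py   # keyboard teleop node; keys i / , / j / l move the robot forward / backward / left / right

- Result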

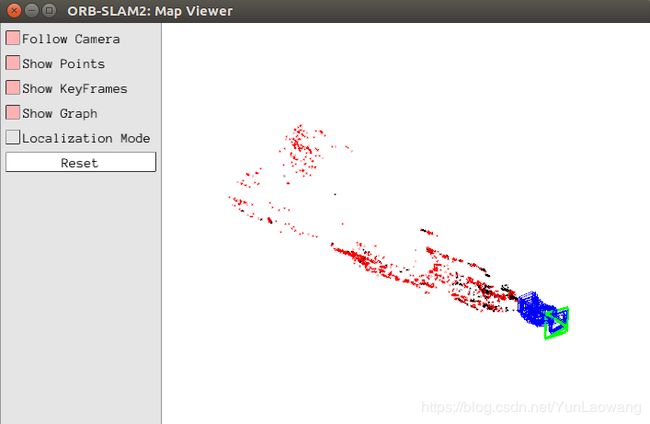

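After the program starts, you will see the initial state shown in the figure above. Driving the robot around then builds the sparse 3D point cloud and localizes the camera pose.

- Possible problems

If the following error appears: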

[ERROR] [1527494740.144219702, 307.635000000]: GazeboRosControlPlugin missing <legacyModeNS> while using DefaultRobotHWSim, defaults to true.

This setting assumes you have an old package with an old implementation of DefaultRobotHWSim, where the robotNamespace is disregarded and absolute paths are used instead.

If you do not want to fix this issue in an old package just set <legacyModeNS> to true.

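Solution:

Open the following .gazebo file and add <legacyModeNS>true</legacyModeNS> inside the gazebo_ros_control plugin block, as the error message itself suggests. (The directory carries a -master suffix if the repository was downloaded as a ZIP instead of cloned with git.)

~/catkin_ws/src/ROS-Academy-for-Beginners/robot_sim_demo/urdf/xbot-u.gazebo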

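Online localization and mapping with kinect2

The workflow for online localization and mapping with a kinect2 is much the same as in the simulation. The ORB-SLAM2 algorithm itself does not change; the only difference is that the subscribed topic names are changed so that it processes a different source of RGB-D images.

- Modify the source code

kinect2 publishes topics at three resolutions: hd (1920×1080), qhd (960×540), and sd (512×424). This article uses qhd: hd images are too large to process comfortably, while sd is not precise enough and gives poor results.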

First locate the following file:

~/catkin_ws/src/ORB_SLAM2/Examples/ROS/ORB_SLAM2/src/ros_rgbd.cc

Change the subscribed topic names:

// change the topic names to the following

message_filters::Subscriber<sensor_msgs::Image> rgb_sub(nh, "/kinect2/qhd/image_color_rect", 1);

message_filters::Subscriber<sensor_msgs::Image> depth_sub(nh, "/kinect2/qhd/image_depth_rect", 1);
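Both sections of this article edit the same two string literals in ros_rgbd.cc. As a minimal sketch of how the two configurations relate, the topic names could be collected in one small helper. The names `RgbdTopics`, `simulationTopics`, and `kinect2Topics` are hypothetical and not part of ORB-SLAM2. Only the qhd topic names come from this article; that the hd and sd topics follow the same naming pattern is an assumption.

```cpp
#include <stdexcept>
#include <string>

// Pair of topic names that the RGB-D node subscribes to.
// Hypothetical helper type, not part of ORB-SLAM2.
struct RgbdTopics {
    std::string rgb;
    std::string depth;
};

// Topic names used with the Gazebo simulation earlier in this article.
inline RgbdTopics simulationTopics() {
    return {"/camera/rgb/image_raw", "camera/depth/image_raw"};
}

// Topic names for a kinect2 resolution mode ("hd", "qhd" or "sd").
// Assumption: hd and sd use the same naming pattern as the qhd topics shown above.
inline RgbdTopics kinect2Topics(const std::string& mode) {
    if (mode != "hd" && mode != "qhd" && mode != "sd") {
        throw std::invalid_argument("unknown kinect2 mode: " + mode);
    }
    const std::string base = "/kinect2/" + mode;
    return {base + "/image_color_rect", base + "/image_depth_rect"};
}
```

With such a helper, the two subscriber constructors could take `topics.rgb` and `topics.depth` instead of hard-coded literals, so switching between the simulation and the kinect2 would touch a single line.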

- Rebuild ORB-SLAM2

chmod +x build_ros.sh

./build_ros.sh

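- Calibrate the kinect2 camera

Before the kinect2 is used, it has to be calibrated so that the color image and the depth image are aligned and correspond to each other one to one. The goal is much the same as that of the associations.txt file generated earlier when running on an RGB-D dataset.

The kinect2 can be calibrated with the kinect2_calibration module provided by iai_kinect2 (see the linked tutorial for the detailed procedure). The calibration results are saved automatically as .yaml files. When images are captured with the kinect2, kinect2_bridge detects the calibration files automatically and rectifies the images.

- Write the yaml file

Before running ORB-SLAM2, write the kinect2 camera parameters into kinect2_qhd.yaml, following the format of TUM1.yaml, to give the algorithm the parameters it needs. (The values here come from iai_kinect2/kinect2_bridge/data/196605135147/calib_color.yaml.)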

%YAML:1.0

#--------------------------------------------------------------------------------------------

# Camera Parameters. Adjust them!

#--------------------------------------------------------------------------------------------

# Camera calibration and distortion parameters (OpenCV)

Camera.fx: 529.97 # these four values are half of the calibration results (qhd is half the hd resolution)

Camera.fy: 526.97

Camera.cx: 477.44

Camera.cy: 261.87

Camera.k1: 0.05627

Camera.k2: -0.0742

Camera.p1: 0.00143

Camera.p2: -0.00170

Camera.k3: 0.0241

Camera.width: 960

Camera.height: 540

# Camera frames per second

Camera.fps: 30.0

# IR projector baseline times fx (approx.)

Camera.bf: 40.0

# Color order of the images (0: BGR, 1: RGB. It is ignored if images are grayscale)

Camera.RGB: 1

# Close/Far threshold. Baseline times.

ThDepth: 50.0

# Depthmap values factor

DepthMapFactor: 1000.0

#--------------------------------------------------------------------------------------------

# ORB Parameters

#--------------------------------------------------------------------------------------------

# ORB Extractor: Number of features per image

ORBextractor.nFeatures: 1000

# ORB Extractor: Scale factor between levels in the scale pyramid

ORBextractor.scaleFactor: 1.2

# ORB Extractor: Number of levels in the scale pyramid

ORBextractor.nLevels: 8

# ORB Extractor: Fast threshold

# Image is divided in a grid. At each cell FAST are extracted imposing a minimum response.

# Firstly we impose iniThFAST. If no corners are detected we impose a lower value minThFAST

# You can lower these values if your images have low contrast

ORBextractor.iniThFAST: 20

ORBextractor.minThFAST: 7

#--------------------------------------------------------------------------------------------

# Viewer Parameters

#--------------------------------------------------------------------------------------------

Viewer.KeyFrameSize: 0.05

Viewer.KeyFrameLineWidth: 1

Viewer.GraphLineWidth: 0.9

Viewer.PointSize: 2

Viewer.CameraSize: 0.08

Viewer.CameraLineWidth: 3

Viewer.ViewpointX: 0

Viewer.ViewpointY: -0.7

Viewer.ViewpointZ: -1.8

Viewer.ViewpointF: 500
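The arithmetic behind a few of these values can be sketched as follows. The sketch assumes that resizing an image by a factor scales fx, fy, cx, and cy by that factor while the distortion coefficients stay unchanged, that the close/far threshold in meters is ThDepth × bf / fx, and that raw depth values are divided by DepthMapFactor to obtain meters. The type and function names are hypothetical, not ORB-SLAM2 API.

```cpp
#include <cmath>
#include <stdexcept>

// Pinhole intrinsics in pixels. Hypothetical helper type.
struct Intrinsics {
    double fx, fy, cx, cy;
};

// Scale intrinsics for an image resized by `scale` (0.5 for hd -> qhd).
// Distortion coefficients act on normalized coordinates and are left as they are.
inline Intrinsics scaleIntrinsics(const Intrinsics& in, double scale) {
    if (scale <= 0.0) {
        throw std::invalid_argument("scale must be positive");
    }
    return {in.fx * scale, in.fy * scale, in.cx * scale, in.cy * scale};
}

// Close/far point threshold in meters, assuming ThDepth * bf / fx.
inline double closeFarThresholdMeters(double bf, double thDepth, double fx) {
    if (fx <= 0.0) {
        throw std::invalid_argument("fx must be positive");
    }
    return bf * thDepth / fx;
}

// Convert a raw depth value to meters by dividing by DepthMapFactor.
// Assumption: a factor close to zero means "no scaling".
inline double depthToMeters(double rawDepth, double depthMapFactor) {
    if (std::fabs(depthMapFactor) < 1e-5) {
        return rawDepth;
    }
    return rawDepth / depthMapFactor;
}
```

For illustration, take hd intrinsics that are simply twice the values in the file above (1059.94, 1053.94, 954.88, 523.74): `scaleIntrinsics(hd, 0.5)` gives back the four qhd values. With Camera.bf = 40.0, ThDepth = 50.0, and Camera.fx = 529.97, the close/far threshold works out to about 3.77 m, and with DepthMapFactor = 1000.0 a raw depth of 1500 corresponds to 1.5 m.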

- Run

Start the kinect2:

roslaunch kinect2_bridge kinect2_bridge.launch

# rosrun kinect2_viewer kinect2_viewer   # optional: run in a new terminal to display the current kinect2 images

Run ORB-SLAM2 in another terminal:

cd ~/catkin_ws/src/ORB_SLAM2

rosrun ORB_SLAM2 RGBD Vocabulary/ORBvoc.txt Examples/ROS/ORB_SLAM2/kinect2_qhd.yaml

- Result

The result shown here is the scene reconstructed right after starting the kinect2, without moving the camera.

- Possible errors

[ INFO] [1561086958.121575646]: Loading nodelet /kinect2_bridge of type kinect2_bridge/kinect2_bridge_nodelet to manager kinect2 with the following remappings:

process[kinect2_points_xyzrgb_hd-6]: started with pid [5927]

[ INFO] [1561086958.130041291]: waitForService: Service [/kinect2/load_nodelet] has not been advertised, waiting...

[ INFO] [1561086958.210775922]: Initializing nodelet with 4 worker threads.

[ INFO] [1561086958.216207215]: waitForService: Service [/kinect2/load_nodelet] is now available.

[ERROR] [1561086958.332887523]: Failed to load nodelet [/kinect2_bridge] of type [kinect2_bridge/kinect2_bridge_nodelet] even after refreshing the cache: Could not find library corresponding to plugin kinect2_bridge/kinect2_bridge_nodelet. Make sure the plugin description XML file has the correct name of the library and that the library actually exists.

[ERROR] [1561086958.332935107]: The error before refreshing the cache was: Could not find library corresponding to plugin kinect2_bridge/kinect2_bridge_nodelet. Make sure the plugin description XML file has the correct name of the library and that the library actually exists.

[FATAL] [1561086958.333112630]: Failed to load nodelet '/kinect2_bridge` of type `kinect2_bridge/kinect2_bridge_nodelet` to manager `kinect2'

[kinect2_bridge-3] process has died [pid 5915, exit code 255, cmd /opt/ros/kinetic/lib/nodelet/nodelet load kinect2_bridge/kinect2_bridge_nodelet kinect2 __name:=kinect2_bridge __log:=/home/wyh/.ros/log/e2a95946-93d2-11e9-8538-40f02f100a1c/kinect2_bridge-3.log].

log file: /home/wyh/.ros/log/e2a95946-93d2-11e9-8538-40f02f100a1c/kinect2_bridge-3*.log

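Cause:

A single package had previously been built with catkin_make -DCATKIN_WHITELIST_PACKAGES="package_name". The workspace was then built in the usual way, but the whitelist setting stays cached and keeps taking effect, so iai_kinect2 was never fully built, which produced this error.

Solution:

Run catkin_make -DCATKIN_WHITELIST_PACKAGES="" to build all packages in the workspace, then build iai_kinect2 with catkin_make -DCMAKE_BUILD_TYPE="Release".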

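Summary

ORB-SLAM2 has a clear pipeline and concise, readable code, and it contains all the usual modules of a SLAM system: Tracking, LocalMapping, and Loop Closing. It supports monocular, stereo (after calibration), and RGB-D input, and it stays robust even under fast motion. It is a classic feature-based SLAM system and well worth studying. It does have some limitations, however: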

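- Cannot be used for navigation and obstacle avoidance

ORB-SLAM2 is a feature-based method, so the map it builds is a sparse 3D point cloud, whereas navigation and obstacle avoidance require a dense map. ORB-SLAM2 is therefore mainly suited to localization and sparse reconstruction, not to navigation and obstacle avoidance.

- Cannot save or load maps

The ORB_SLAM2 source provides no functions for saving or loading a map. Localization and mapping can only run online in real time, and even relocalization happens online, so a map cannot be saved and loaded offline.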