Python Data Analysis in Practice (Part 1)

Notes on my first time processing JSON-format files with Python

Problems importing a JSON file

- Importing the data

import json

fp = open('abcd.json')  # open the file
file_data = fp.readlines()  # read line by line to import the data
fp.close()  # close the file

I then considered not reading the data line by line, and instead using the json module's own load() function to process the file as a whole.

import json

with open("abcd.json",'r') as load_f:
    load_dict = json.load(load_f)

But this raised an error:

json.decoder.JSONDecodeError: Extra data: line 2 column 1 (char 1243)

- Cause analysis

In the json lib ->decoder.py->decode function

if end != len(s):
    raise ValueError(errmsg("Extra data", s, end, len(s)))

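When decoding a JSON file, an "Extra data" error is raised if the input does not end at the point where the document should have ended. In my file "abcd.json", each line is a str holding one complete JSON document, but taken as a whole the file is no longer a single document; it is a collection of several. load() finishes decoding the first line at its closing "}", expects the input to end there, finds a second line instead, and therefore reports Extra data.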

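- Summary

When importing data, choose the method that matches the format of the data. This means we need to fully understand the data we are about to process before we start processing it.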
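For a file like the one described above, where every line is its own JSON document, one option is to parse each line separately with json.loads(). The following is a minimal sketch, not the code used in this post: the helper name load_json_lines and the sample lines are made up for illustration, and the sample only imitates the header/event layout of abcd.json.

```python
import json

def load_json_lines(lines):
    """Parse an iterable of text lines that hold one JSON document each."""
    records = []
    for line_number, line in enumerate(lines, start=1):
        line = line.strip()
        if not line:  # ignore blank lines
            continue
        try:
            records.append(json.loads(line))
        except json.JSONDecodeError as err:
            # report the bad line and carry on with the rest
            print('skipping line %d: %s' % (line_number, err))
    return records

# Made-up sample standing in for the contents of abcd.json.
sample_lines = [
    '{"header": {"m2": "dev-A"}, "event": {"s-001": [{"qhId": "e1"}]}}\n',
    '{"header": {"m2": "dev-B"}}\n',
    'not json\n',
]
records = load_json_lines(sample_lines)
print(len(records))  # 2

# Decoding two documents as if they were one reproduces the error above.
message = None
try:
    json.loads(''.join(sample_lines[:2]))
except json.JSONDecodeError as err:
    message = err.msg
print(message)  # Extra data
```

With a real file, the open file object can be passed directly, for example `with open('abcd.json') as f: records = load_json_lines(f)`, because iterating over a file yields its lines.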

Rebuilding the data with a pandas DataFrame

- Official pandas.DataFrame reference

http://pandas.pydata.org/pandas-docs/stable/generated/pandas.DataFrame.html

class pandas.DataFrame(data=None, index=None, columns=None, dtype=None, copy=False)

Two-dimensional size-mutable, potentially heterogeneous tabular data structure with labeled axes (rows and columns). Arithmetic operations align on both row and column labels. Can be thought of as a dict-like container for Series objects. The primary pandas data structure.

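In other words, a DataFrame is a two-dimensional, size-mutable table whose columns may hold different types, with labels on both axes (rows and columns). Arithmetic operations align on those row and column labels. It can be thought of as a dict-like container for Series objects, and it is the primary pandas data structure.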

parameters:

data : numpy ndarray (structured or homogeneous), dict, or DataFrame

Dict can contain Series, arrays, constants, or list-like objects

index: Index or array-like

Index to use for resulting frame. Will default to np.arange(n) if no indexing information part of input data and no index provided

columns : Index or array-like

Column labels to use for resulting frame. Will default to np.arange(n) if no column labels are provided

dtype : dtype, default None

Data type to force, otherwise infer

copy : boolean, default False

Copy data from inputs. Only affects DataFrame / 2d ndarray input

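The above is an excerpt from the official pandas.DataFrame reference together with my paraphrase of it.

- Cleaning up and rebuilding the raw JSON data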

import pandas as pd

def is_json(data):  # check whether a string is valid JSON
    try:
        json.loads(data)
    except ValueError:
        return False
    return True

# create an empty DataFrame
df = pd.DataFrame(columns = ['Session','qhBegin','qhEnd','qhId','k1','k2','k3','k4','k5','k6','m2'])

# index = 1
for i in range(len(file_data)):
    if is_json(file_data[i]):
        json_data = json.loads(file_data[i])  # convert the string into a dict
        if 'event' in json_data:
            event = json_data.get('event')  # value stored under the key 'event'
            key_value = list(event.keys())  # keys of the event dict, as a list
            Se = key_value[0]  # session ID
            event_data = event.get(Se)  # event records for this session
            dev_num = json_data.get('header').get('m2')  # device ID
            for j in range(len(event_data)):
                undata = event_data[j]
                # extract every field of the event record;
                # a field that is missing from the record becomes ''
                qhBegin = undata.get('qhBegin', '')
                qhEnd = undata.get('qhEnd', '')
                qhId = undata.get('qhId', '')
                k1 = undata.get('k1', '')
                k2 = undata.get('k2', '')
                k3 = undata.get('k3', '')
                k4 = undata.get('k4', '')
                k5 = undata.get('k5', '')
                k6 = undata.get('k6', '')

                # append the event record to the DataFrame
                # Method 1
                # df.loc[index] = [Se, qhBegin, qhEnd, qhId, k1, k2, k3, k4, k5, k6, dev_num]
                # index = index + 1

                # Method 2 (note: DataFrame.append was removed in pandas 2.0)
                # df1 = pd.DataFrame([[Se, qhBegin, qhEnd, qhId, k1, k2, k3, k4, k5, k6, dev_num]],
                #                    columns=['Session','qhBegin','qhEnd','qhId','k1','k2','k3','k4','k5','k6','m2'])
                # df = df.append(df1)

                # Method 3
                df1 = pd.DataFrame([[Se, qhBegin, qhEnd, qhId, k1, k2, k3, k4, k5, k6, dev_num]],
                                   columns=['Session','qhBegin','qhEnd','qhId','k1','k2','k3','k4','k5','k6','m2'])
                df = pd.concat([df,df1],ignore_index=True)

- Summary

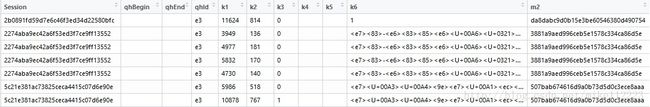

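The code above rebuilds the data as a pandas DataFrame. In the original JSON file, the records stored under the event key do not all carry every column: some records have qhBegin and some do not; some have k1 and some do not. The code therefore checks each column for each record and assigns an empty string where the column is missing. The figure above shows roughly what the resulting DataFrame looks like.

When loading the event data into the DataFrame I tried three methods and found that Method 3, pd.concat(), was the fastest, so I use concat() to save time. ignore_index controls whether the index carried by the input data is discarded and replaced by a new one counting from 0. loc is mainly used for locating and modifying data.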
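Each of the three methods above grows the DataFrame one row at a time. A commonly used alternative, which is not one of the methods tried in this post, is to collect the rows in a plain Python list and build the DataFrame once at the end. The sketch below assumes the same column names as above; the helper build_rows and the sample records are hypothetical.

```python
import pandas as pd

COLUMNS = ['Session', 'qhBegin', 'qhEnd', 'qhId',
           'k1', 'k2', 'k3', 'k4', 'k5', 'k6', 'm2']
EVENT_FIELDS = ['qhBegin', 'qhEnd', 'qhId',
                'k1', 'k2', 'k3', 'k4', 'k5', 'k6']

def build_rows(session, device, event_records):
    """Turn the event records of one session into a list of row dicts."""
    rows = []
    for record in event_records:
        # a field that is missing from the record becomes ''
        row = {field: record.get(field, '') for field in EVENT_FIELDS}
        row['Session'] = session
        row['m2'] = device
        rows.append(row)
    return rows

# Made-up records for illustration only.
sample_events = [
    {'qhId': 'e1', 'qhBegin': '100', 'qhEnd': '160'},
    {'qhId': 'e3', 'k1': '5200', 'k6': '2'},
]
rows = build_rows('s-001', 'dev-A', sample_events)

# Build the DataFrame in a single step; columns= fixes the column order.
df_demo = pd.DataFrame(rows, columns=COLUMNS)
print(df_demo)
```

In the loop over file_data, the rows of every session would be added to one shared list with rows.extend(...), and pd.DataFrame would be called once after the loop.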

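Removing duplicate data

While users upload data, retransmissions often occur, which leaves completely identical records on the server. Deduplication is therefore needed after the data has been rebuilt and before any further processing.

- Official pandas.DataFrame.drop_duplicates reference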

http://pandas.pydata.org/pandas-docs/stable/generated/pandas.DataFrame.drop_duplicates.html

DataFrame.drop_duplicates(subset=None, keep='first', inplace=False)

Return DataFrame with duplicate rows removed, optionally only considering certain columns.

parameters:

subset : column label or sequence of labels, optional

Only consider certain columns for identifying duplicates, by default use all of the columns

keep: {'first', 'last', False}, default 'first'

- first : Drop duplicates except for the first occurrence.

- last : Drop duplicates except for the last occurrence.

- False : Drop all duplicates.

inplace : boolean, default False

Whether to drop duplicates in place or to return a copy

return:

deduplicated : DataFrame

The above is copied from the official pandas.DataFrame.drop_duplicates reference.

# remove rows that are complete duplicates
df = df.drop_duplicates()

# total number of users
n_user = len(df.drop_duplicates(['m2']))

# select the rows that match the condition and count them
df_t_e1 = df[(df.qhId=='e1')]
df_e1 = df_t_e1.drop_duplicates(['m2'],keep='last')  # deduplicate on the device ID m2, keeping the last row
df_p_e1 = df_e1[(df_e1.qhEnd!='')]  # passed the tutorial

# lengths of the DataFrames
e1_count = len(df_e1)
e1_p_count = len(df_p_e1)

# pass rate
e1_p_percent = e1_p_count/e1_count

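- Summary

The code above is one excerpt from my script that reflects some ideas about deduplication. Calling drop_duplicates() with no arguments removes only rows that are complete duplicates: every removed row is identical in all columns to some row that is kept. For my data these rows clearly have to go, otherwise they would be counted twice. To deduplicate on one or several columns only, use drop_duplicates(['column1', 'column2', ...]). The last and rather important point is which occurrence to keep: the first, the last, or none of the duplicated rows. That depends on the actual situation: keep='first' keeps the first occurrence, keep='last' keeps the last, and keep=False (the boolean, not the string 'False') keeps none.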
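The difference between these options is easiest to see on a tiny table. The following sketch uses made-up rows that reuse the column names m2, qhId and qhEnd from above; it is an illustration, not data from the real file.

```python
import pandas as pd

# Rows 0 and 1 are identical in every column.
toy = pd.DataFrame({
    'm2':    ['dev-A', 'dev-A', 'dev-A', 'dev-B'],
    'qhId':  ['e1',    'e1',    'e1',    'e1'],
    'qhEnd': ['',      '',      '160',   ''],
})

# No arguments: only complete duplicates are removed (row 1 goes).
exact = toy.drop_duplicates()

# Deduplicate on the device ID only, keeping a different occurrence each time.
first_per_device = toy.drop_duplicates(['m2'], keep='first')
last_per_device = toy.drop_duplicates(['m2'], keep='last')
only_unique = toy.drop_duplicates(['m2'], keep=False)

print(list(exact.index))             # [0, 2, 3]
print(list(first_per_device.index))  # [0, 3]
print(list(last_per_device.index))   # [2, 3]
print(list(only_unique.index))       # [3]
```

Here keep='last' keeps the row of dev-A that has a non-empty qhEnd, which is the reason the pass-rate code above keeps the last row per device.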

Plotting the data

- Pie chart

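import matplotlib.pyplot as plt

# plot the tutorial pass rate
plt.figure(1,figsize=(12,6))
plt.title('新手指导通过率')  # "Tutorial pass rate"
labels = [u'通过新手指导',u'未通过新手指导']  # "passed the tutorial", "did not pass the tutorial"
sizes = [100*e1_p_percent,100-100*e1_p_percent]
colors = ['lavender','gold']
explode = [0.1,0]
patches,l_text,p_text = plt.pie(sizes,explode=explode,labels=labels,colors=colors,
                                labeldistance = 1.5,autopct = '%3.1f%%',shadow = True,
                                startangle = 0,pctdistance = 1.3)
# labeldistance: how far the label text sits from the center, as a multiple of the radius
#   (1.1 means 1.1 times the radius)
# autopct: format of the percentage text; '%3.1f%%' prints a float with a minimum
#   width of 3 characters and 1 decimal place, followed by a percent sign
# shadow: whether the pie has a shadow
# startangle: starting angle; 0 means the first wedge starts at 0 degrees and the wedges
#   go counterclockwise. Starting from 90 degrees usually looks better
# pctdistance: distance of the percentage text from the center
# patches, l_text, p_text: return values of the pie call; p_text holds the percentage
#   texts, l_text holds the label texts outside the pie

# change the text size:
# loop over every text object and call set_size on it
for t in l_text:
    t.set_size(9)
for t in p_text:
    t.set_size(9)

# use the same scale on the x and y axes so that the pie is a circle
plt.axis('equal')
plt.legend()
plt.draw()
plt.savefig('新手引导.jpg')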

All the details of the pie chart are in the code. If the figure cannot be saved as 'jpg', you need to run pip install pillow.
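When autopct is a string, matplotlib fills it in with ordinary printf-style formatting, so the effect of '%3.1f%%' can be checked without drawing anything. A small sketch follows; the percentage values are made up.

```python
fmt = '%3.1f%%'  # minimum width 3, one decimal place, then a literal percent sign

rounded = fmt % 62.46     # rounded to one decimal place
short = fmt % 5.0         # already 3 characters wide, so no padding is added
padded = '%6.1f%%' % 5.0  # a larger minimum width pads with spaces on the left

print(repr(rounded))  # '62.5%'
print(repr(short))    # '5.0%'
print(repr(padded))   # '   5.0%'
```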

- Bar chart

import numpy as np

# select the rows that match the condition and count them
df_e3_k1 = df[(df.qhId=='e3')]
df_e3_k1_1 = df_e3_k1[(df_e3_k1.k6=='1')]  # 热情海岛 (Passionate Island)
df_e3_k1_2 = df_e3_k1[(df_e3_k1.k6=='2')]  # 神秘东方 (Mysterious Orient)
df_e3_k1_3 = df_e3_k1[(df_e3_k1.k6=='3')]  # 货运码头 (Freight Dock)
df_e3_k1_4 = df_e3_k1[(df_e3_k1.k6=='4')]  # 沙漠风暴 (Desert Storm)

df_e3_k1_1_temp = df_e3_k1_1['k1'].astype('int').copy()
df_e3_k1_2_temp = df_e3_k1_2['k1'].astype('int').copy()
df_e3_k1_3_temp = df_e3_k1_3['k1'].astype('int').copy()
df_e3_k1_4_temp = df_e3_k1_4['k1'].astype('int').copy()

# count the mileage ranges for 热情海岛
e3_k1_1_b4_count = len(df_e3_k1_1_temp[df_e3_k1_1_temp<=4000])
e3_k1_1_f4t8_count = len(df_e3_k1_1_temp[(df_e3_k1_1_temp>4000) & (df_e3_k1_1_temp<=8000)])
e3_k1_1_f8t12_count = len(df_e3_k1_1_temp[(df_e3_k1_1_temp>8000) & (df_e3_k1_1_temp<=12000)])
e3_k1_1_a12_count = len(df_e3_k1_1_temp[df_e3_k1_1_temp>12000])
e3_k1_1 = np.array([e3_k1_1_b4_count, e3_k1_1_f4t8_count, e3_k1_1_f8t12_count, e3_k1_1_a12_count])

# count the mileage ranges for 神秘东方
e3_k1_2_b4_count = len(df_e3_k1_2_temp[df_e3_k1_2_temp<=4000])
e3_k1_2_f4t8_count = len(df_e3_k1_2_temp[(df_e3_k1_2_temp>4000) & (df_e3_k1_2_temp<=8000)])
e3_k1_2_f8t12_count = len(df_e3_k1_2_temp[(df_e3_k1_2_temp>8000) & (df_e3_k1_2_temp<=12000)])
e3_k1_2_a12_count = len(df_e3_k1_2_temp[df_e3_k1_2_temp>12000])
e3_k1_2 = np.array([e3_k1_2_b4_count, e3_k1_2_f4t8_count, e3_k1_2_f8t12_count, e3_k1_2_a12_count])

# count the mileage ranges for 货运码头
e3_k1_3_b4_count = len(df_e3_k1_3_temp[df_e3_k1_3_temp<=4000])
e3_k1_3_f4t8_count = len(df_e3_k1_3_temp[(df_e3_k1_3_temp>4000) & (df_e3_k1_3_temp<=8000)])
e3_k1_3_f8t12_count = len(df_e3_k1_3_temp[(df_e3_k1_3_temp>8000) & (df_e3_k1_3_temp<=12000)])
e3_k1_3_a12_count = len(df_e3_k1_3_temp[df_e3_k1_3_temp>12000])
e3_k1_3 = np.array([e3_k1_3_b4_count, e3_k1_3_f4t8_count, e3_k1_3_f8t12_count, e3_k1_3_a12_count])

# count the mileage ranges for 沙漠风暴
e3_k1_4_b4_count = len(df_e3_k1_4_temp[df_e3_k1_4_temp<=4000])
e3_k1_4_f4t8_count = len(df_e3_k1_4_temp[(df_e3_k1_4_temp>4000) & (df_e3_k1_4_temp<=8000)])
e3_k1_4_f8t12_count = len(df_e3_k1_4_temp[(df_e3_k1_4_temp>8000) & (df_e3_k1_4_temp<=12000)])
e3_k1_4_a12_count = len(df_e3_k1_4_temp[df_e3_k1_4_temp>12000])
e3_k1_4 = np.array([e3_k1_4_b4_count, e3_k1_4_f4t8_count, e3_k1_4_f8t12_count, e3_k1_4_a12_count])

# plot the mileage completed by players
plt.figure(3,figsize=(12,6))
plt.title('玩家完成里程')  # "Mileage completed by players"
X = np.arange(0,10,2.5)
plt.bar(X,e3_k1_1,width = 0.5,facecolor = 'gold',edgecolor = 'white',label='热情海岛')
plt.bar(X+0.5,e3_k1_2,width = 0.5,facecolor = 'y',edgecolor = 'white',label='神秘东方')
plt.bar(X+1,e3_k1_3,width = 0.5,facecolor = 'yellowgreen',edgecolor = 'white',label='货运码头')
plt.bar(X+1.5,e3_k1_4,width = 0.5,facecolor = 'forestgreen',edgecolor = 'white',label='沙漠风暴')
# width: width of each bar
# for a horizontal bar chart use plt.barh; the width property then becomes height
# to draw several groups side by side, shift the x positions with +
# facecolor: fill color of the bars
# edgecolor: color of the bar borders
# to draw a group below the axis, put a minus sign in front of the data

# add text to the figure
for x,y in zip(X,e3_k1_1):
    plt.text(x, y+0.05, y, ha='center', va= 'bottom')  # use '%.2f' % y to keep two decimal places
for x,y in zip(X,e3_k1_2):
    plt.text(x+0.5, y+0.05, y, ha='center', va= 'bottom')
for x,y in zip(X, e3_k1_3):
    plt.text(x+1, y+0.05, y, ha='center', va='bottom')
for x,y in zip(X, e3_k1_4):
    plt.text(x+1.5, y+0.05, y, ha='center', va='bottom')

# set the y-axis range: plt.ylim(0,+
# change the x-axis tick positions and the text shown at them
x = [0.75,3.25,5.75,8.25]
group_labels = ['4K以下','4K-8K','8K-12K','12K以上']  # "below 4K", "4K-8K", "8K-12K", "above 12K"
plt.xticks(x,group_labels,rotation=0)
plt.ylabel('次数',rotation=0)  # "count"
plt.xlabel('分布区间')  # "range"
plt.legend()
plt.draw()
plt.savefig('完成里程.jpg')

The code above covers the details of extracting the data and drawing the bar chart.
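The four mileage ranges are counted above with explicit comparisons, repeated once per map. As a possible shortcut, pandas.cut can assign every value to a range in one call. The sketch below is an alternative to the code in this post, not a copy of it; the mileage values and the English range labels are made up.

```python
import pandas as pd

# Made-up mileage values standing in for one map's k1 column.
mileage = pd.Series([1500, 4000, 4001, 9500, 12000, 12001, 30000, 50000])

# Bin edges; with the default right=True every bin is (low, high],
# which matches the <=4000, >4000 & <=8000, ... comparisons used above.
bins = [float('-inf'), 4000, 8000, 12000, float('inf')]
labels = ['<=4K', '4K-8K', '8K-12K', '>12K']

buckets = pd.cut(mileage, bins=bins, labels=labels)
counts = buckets.value_counts()

# Read the counts back in the order of the ranges.
ordered_counts = [int(counts[label]) for label in labels]
print(ordered_counts)  # [2, 1, 2, 3]
```

The resulting list can be passed to plt.bar in place of one of the hand-built arrays such as e3_k1_1.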

Some odds and ends

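# add a Chinese font
from pylab import *
mpl.rcParams['font.sans-serif'] = ['SimHei']

# imitate MATLAB's tic and toc
import time

def tic():
    # time.clock() was removed in Python 3.8; perf_counter() replaces it
    globals()['bt'] = time.perf_counter()

def toc():
    print('Elapsed time: %.2f seconds' % (time.perf_counter()-globals()['bt']))

# turn on interactive mode
import matplotlib.pyplot as plt
plt.ion()
# plt.show() blocks the program. To measure the program's running time, we can either
# save the figure as a jpg as shown above, or call plt.show() after plt.ion(), so that
# the program does not wait for the figure window to be closed by hand before continuing.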

Finally, here is a link to someone else's blog post about colors in matplotlib:

http://www.cnblogs.com/darkknightzh/p/6117528.html
