ORB-SLAM2源码阅读(1)

ORB-SLAM2源码阅读(1)

ORB-SLAM2 的代码以结构清晰,注释完整,易于理解著称,最好先阅读一下论文再来读代码,网上有很多大神已经翻译好了,个人推荐这位的ORB-SLAM2全文翻译

系统简介

ORB-SLAM系统同时运行三个线程:

1、Tracking线程

- 对于新读取的帧,提取ORB特征

- (系统初始化)

- 当前帧位姿初值估计(根据上一帧+motion-only BA,或进行重定位)

- 局部地图跟踪

对上一步得到的位姿初值进行进一步BA优化

局部地图:指Covisibility Graph中附近的KFs及其MapPoints所组成的局部的地图 - 决定是否将当前帧作为关键帧插入Map

2、Local Mapping线程

- 接收从Tracking线程插入的KF,并进行预处理

- 别除质量较差的MapPoints

- 通过三角化生成新的MapPoints

- 局部地图BA优化

- 剔除冗余的局部关键帧

3、Loop Closing线程

- 接收Local Mapping传来的筛选处理后的KF

- 检测出一批Candidate KFs

- 计算Sim3,确定最终的Loop KF

- 进行回环融合

- 优化Essential Graph

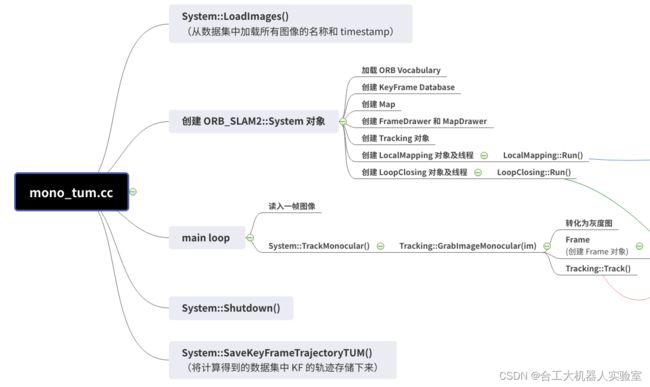

下面以 mono_tum.cc 程序为入口,该程序是官方提供的 example,其对 TUM 数据集中的视频序列进行 SLAM

根据该程序,可以清晰地看出该怎么调用整个 ORB-SLAM2 系统

mono_tum.cc 简析

详细注释的代码如下:

/**

* This file is part of ORB-SLAM2.

*

* Copyright (C) 2014-2016 Raúl Mur-Artal (University of Zaragoza)

* For more information see

*

* ORB-SLAM2 is free software: you can redistribute it and/or modify

* it under the terms of the GNU General Public License as published by

* the Free Software Foundation, either version 3 of the License, or

* (at your option) any later version.

*

* ORB-SLAM2 is distributed in the hope that it will be useful,

* but WITHOUT ANY WARRANTY; without even the implied warranty of

* MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

* GNU General Public License for more details.

*

* You should have received a copy of the GNU General Public License

* along with ORB-SLAM2. If not, see

#include 根据代码可绘制如下程序框图:

其中LoadImages()函数和main loop需要自己编写

LoadImages()函数用于从数据集中加载图像,这很简单

而根据不同的相机类型调用不同的接口函数即可,有以下三种:

- TrackStereo( )

- TrackRGBD( )

- TrackMonocular( )

但调用函数接口的前提是创建一个ORB::System对象,先看函数声明

// Initialize the SLAM system. It launches the Local Mapping, Loop Closing and Viewer threads.

System(const string &strVocFile, const string &strSettingsFile, const eSensor sensor, const bool bUseViewer = true);

主要传入参数有三个:

- ORB字典

- 参数配置文件

- 相机类型

其中参数配置文件包括相机内参、焦距、深度图尺度等等,相机类型自然也有三种

再看函数定义:

System::System(const string &strVocFile, const string &strSettingsFile, const eSensor sensor,

const bool bUseViewer):mSensor(sensor), mpViewer(static_cast<Viewer*>(NULL)), mbReset(false),mbActivateLocalizationMode(false),

mbDeactivateLocalizationMode(false)

{

// Output welcome message

cout << endl <<

"ORB-SLAM2 Copyright (C) 2014-2016 Raul Mur-Artal, University of Zaragoza." << endl <<

"This program comes with ABSOLUTELY NO WARRANTY;" << endl <<

"This is free software, and you are welcome to redistribute it" << endl <<

"under certain conditions. See LICENSE.txt." << endl << endl;

cout << "Input sensor was set to: ";

if(mSensor==MONOCULAR)

cout << "Monocular" << endl;

else if(mSensor==STEREO)

cout << "Stereo" << endl;

else if(mSensor==RGBD)

cout << "RGB-D" << endl;

//Check settings file

cv::FileStorage fsSettings(strSettingsFile.c_str(), cv::FileStorage::READ);

if(!fsSettings.isOpened())

{

cerr << "Failed to open settings file at: " << strSettingsFile << endl;

exit(-1);

}

//Load ORB Vocabulary

cout << endl << "Loading ORB Vocabulary. This could take a while..." << endl;

mpVocabulary = new ORBVocabulary();

bool bVocLoad = mpVocabulary->loadFromTextFile(strVocFile);

if(!bVocLoad)

{

cerr << "Wrong path to vocabulary. " << endl;

cerr << "Falied to open at: " << strVocFile << endl;

exit(-1);

}

cout << "Vocabulary loaded!" << endl << endl;

//Create KeyFrame Database

mpKeyFrameDatabase = new KeyFrameDatabase(*mpVocabulary);

//Create the Map

mpMap = new Map();

//Create Drawers. These are used by the Viewer

mpFrameDrawer = new FrameDrawer(mpMap);

mpMapDrawer = new MapDrawer(mpMap, strSettingsFile);

//Initialize the Tracking thread

//(it will live in the main thread of execution, the one that called this constructor)

mpTracker = new Tracking(this, mpVocabulary, mpFrameDrawer, mpMapDrawer,

mpMap, mpKeyFrameDatabase, strSettingsFile, mSensor);

//Initialize the Local Mapping thread and launch

mpLocalMapper = new LocalMapping(mpMap, mSensor==MONOCULAR);

mptLocalMapping = new thread(&ORB_SLAM2::LocalMapping::Run,mpLocalMapper);

//Initialize the Loop Closing thread and launch

mpLoopCloser = new LoopClosing(mpMap, mpKeyFrameDatabase, mpVocabulary, mSensor!=MONOCULAR);

mptLoopClosing = new thread(&ORB_SLAM2::LoopClosing::Run, mpLoopCloser);

//Initialize the Viewer thread and launch

if(bUseViewer)

{

mpViewer = new Viewer(this, mpFrameDrawer,mpMapDrawer,mpTracker,strSettingsFile);

mptViewer = new thread(&Viewer::Run, mpViewer);

mpTracker->SetViewer(mpViewer);

}

//Set pointers between threads

mpTracker->SetLocalMapper(mpLocalMapper);

mpTracker->SetLoopClosing(mpLoopCloser);

mpLocalMapper->SetTracker(mpTracker);

mpLocalMapper->SetLoopCloser(mpLoopCloser);

mpLoopCloser->SetTracker(mpTracker);

mpLoopCloser->SetLocalMapper(mpLocalMapper);

}

System.cc 为系统的入口,其负责创建各种对象,同时创建 Tracking,LocalMapping, LoopCLosing 三个线程并运行

main loop中的TrackMonocular( )是启动 Tracking 线程的入口

Tracking 线程为主线程,而 Local Mapping 和 Loop Closing 线程是通过new thread创建的

关注一下TrackMonocular( )的实现

cv::Mat System::TrackMonocular(const cv::Mat &im, const double ×tamp)

{

if(mSensor!=MONOCULAR)

{

cerr << "ERROR: you called TrackMonocular but input sensor was not set to Monocular." << endl;

exit(-1);

}

// Check mode change

{

unique_lock<mutex> lock(mMutexMode);

//纯定位模式,只有Tracking线程

if(mbActivateLocalizationMode)

{

mpLocalMapper->RequestStop();

// Wait until Local Mapping has effectively stopped

while(!mpLocalMapper->isStopped())

{

usleep(1000);

}

mpTracker->InformOnlyTracking(true);

mbActivateLocalizationMode = false;

}

//SLAM运行模式

if(mbDeactivateLocalizationMode)

{

mpTracker->InformOnlyTracking(false);

mpLocalMapper->Release();

mbDeactivateLocalizationMode = false;

}

}

// Check reset

{

unique_lock<mutex> lock(mMutexReset);

if(mbReset)

{

mpTracker->Reset();

mbReset = false;

}

}

//估计当前图像的相机位姿Tcw

cv::Mat Tcw = mpTracker->GrabImageMonocular(im,timestamp);

unique_lock<mutex> lock2(mMutexState);

mTrackingState = mpTracker->mState;

mTrackedMapPoints = mpTracker->mCurrentFrame.mvpMapPoints;

mTrackedKeyPointsUn = mpTracker->mCurrentFrame.mvKeysUn;

return Tcw;

}

发现其主要通过GrabImageMonocular( )函数估计了估计当前图像的相机位姿Tcw

再看GrabImageMonocular( )函数的实现

cv::Mat Tracking::GrabImageMonocular(const cv::Mat &im, const double ×tamp)

{

mImGray = im;

//将彩色图转化为灰度图

if(mImGray.channels()==3)

{

if(mbRGB)

cvtColor(mImGray,mImGray,CV_RGB2GRAY);

else

cvtColor(mImGray,mImGray,CV_BGR2GRAY);

}

else if(mImGray.channels()==4)

{

if(mbRGB)

cvtColor(mImGray,mImGray,CV_RGBA2GRAY);

else

cvtColor(mImGray,mImGray,CV_BGRA2GRAY);

}

//创建frame对象

if(mState==NOT_INITIALIZED || mState==NO_IMAGES_YET) //初始化的帧提取两倍特征点数

mCurrentFrame = Frame(mImGray,timestamp,mpIniORBextractor,mpORBVocabulary,mK,mDistCoef,mbf,mThDepth);

else

mCurrentFrame = Frame(mImGray,timestamp,mpORBextractorLeft,mpORBVocabulary,mK,mDistCoef,mbf,mThDepth);

//Tracking线程开始工作

Track();

return mCurrentFrame.mTcw.clone();

}

可以看到对输入图像预处理并创建frame对象后,直接调用Track( )函数,使得Tracking线程开始工作

Tracking线程作为主线程,非常重要,下面学习其具体实现

Tracking线程

void Tracking::Track()

{

if(mState==NO_IMAGES_YET)

{

mState = NOT_INITIALIZED;

}

mLastProcessedState=mState;

// Get Map Mutex -> Map cannot be changed

unique_lock<mutex> lock(mpMap->mMutexMapUpdate);

//初始化

if(mState==NOT_INITIALIZED)

{

if(mSensor==System::STEREO || mSensor==System::RGBD)

StereoInitialization();

else

MonocularInitialization();

mpFrameDrawer->Update(this);

if(mState!=OK)

return;

}

else

{

//初始化完成后估计当前帧位姿

//由motion model或Reference KF估计相机位姿,在Track Local Map中进一步优化

// System is initialized. Track Frame.

bool bOK;

// Initial camera pose estimation using motion model or relocalization (if tracking is lost)

if(!mbOnlyTracking) //SLAM模式

{

//Local Mapping默认是激活的

// Local Mapping is activated. This is the normal behaviour, unless

// you explicitly activate the "only tracking" mode.

if(mState==OK)

{

// Local Mapping might have changed some MapPoints tracked in last frame

CheckReplacedInLastFrame();

//若运动模型还未建立或刚刚进行了重定位

if(mVelocity.empty() || mCurrentFrame.mnId<mnLastRelocFrameId+2)

{

//根据Reference KF进行估计

bOK = TrackReferenceKeyFrame();

}

else //否则根据运动模型(速度不变模型)进行估计

{

bOK = TrackWithMotionModel();

if(!bOK) //若追踪失败,再根据Reference KF进行估计

bOK = TrackReferenceKeyFrame();

}

}

else //mState==Lost,需要重定位,将当前帧与KFDataBase中的KF匹配

{

bOK = Relocalization();

}

}

else

{

// Localization Mode: Local Mapping is deactivated

if(mState==LOST)

{

bOK = Relocalization();

}

else

{

if(!mbVO)

{

// In last frame we tracked enough MapPoints in the map

if(!mVelocity.empty())

{

bOK = TrackWithMotionModel();

}

else

{

bOK = TrackReferenceKeyFrame();

}

}

else

{

// In last frame we tracked mainly "visual odometry" points.

// We compute two camera poses, one from motion model and one doing relocalization.

// If relocalization is sucessfull we choose that solution, otherwise we retain

// the "visual odometry" solution.

bool bOKMM = false; //motion model

bool bOKReloc = false; //recolization

vector<MapPoint*> vpMPsMM;

vector<bool> vbOutMM;

cv::Mat TcwMM;

if(!mVelocity.empty())

{

bOKMM = TrackWithMotionModel();

vpMPsMM = mCurrentFrame.mvpMapPoints;

vbOutMM = mCurrentFrame.mvbOutlier;

TcwMM = mCurrentFrame.mTcw.clone();

}

bOKReloc = Relocalization();

if(bOKMM && !bOKReloc) //TrackWithMotionModel成功,Relocalization失败

{

mCurrentFrame.SetPose(TcwMM);

mCurrentFrame.mvpMapPoints = vpMPsMM;

mCurrentFrame.mvbOutlier = vbOutMM;

if(mbVO)

{

for(int i =0; i<mCurrentFrame.N; i++)

{

if(mCurrentFrame.mvpMapPoints[i] && !mCurrentFrame.mvbOutlier[i])

{

mCurrentFrame.mvpMapPoints[i]->IncreaseFound();

}

}

}

}

else if(bOKReloc)

{

mbVO = false;

}

bOK = bOKReloc || bOKMM; //TrackWithMotionModel和Relocalization哪个成功用哪个

}

}

}

mCurrentFrame.mpReferenceKF = mpReferenceKF;

//局部地图跟踪

// If we have an initial estimation of the camera pose and matching. Track the local map.

if(!mbOnlyTracking) //SLAM模式

{

if(bOK)

bOK = TrackLocalMap();

}

else //localization模式

{

// mbVO true means that there are few matches to MapPoints in the map. We cannot retrieve

// a local map and therefore we do not perform TrackLocalMap(). Once the system relocalizes

// the camera we will use the local map again.

if(bOK && !mbVO)

bOK = TrackLocalMap();

}

if(bOK)

mState = OK; //当前帧位姿估计+局部地图跟踪都成功才算成功

else

mState=LOST;

// Update drawer

mpFrameDrawer->Update(this);

//决定是否生成关键帧,并插入关键帧

// If tracking were good, check if we insert a keyframe

if(bOK)

{

// Update motion model

if(!mLastFrame.mTcw.empty())

{

cv::Mat LastTwc = cv::Mat::eye(4,4,CV_32F);

mLastFrame.GetRotationInverse().copyTo(LastTwc.rowRange(0,3).colRange(0,3));

mLastFrame.GetCameraCenter().copyTo(LastTwc.rowRange(0,3).col(3));

mVelocity = mCurrentFrame.mTcw*LastTwc;

}

else

mVelocity = cv::Mat();

mpMapDrawer->SetCurrentCameraPose(mCurrentFrame.mTcw);

//清除VO生成的临时性MapPoints

// Clean VO matches

for(int i=0; i<mCurrentFrame.N; i++)

{

MapPoint* pMP = mCurrentFrame.mvpMapPoints[i];

if(pMP)

if(pMP->Observations()<1)

{

mCurrentFrame.mvbOutlier[i] = false;

mCurrentFrame.mvpMapPoints[i]=static_cast<MapPoint*>(NULL);

}

}

// Delete temporal MapPoints

for(list<MapPoint*>::iterator lit = mlpTemporalPoints.begin(), lend = mlpTemporalPoints.end(); lit!=lend; lit++)

{

MapPoint* pMP = *lit;

delete pMP;

}

mlpTemporalPoints.clear();

//判断是否需要插入关键帧

// Check if we need to insert a new keyframe

if(NeedNewKeyFrame())

CreateNewKeyFrame();

// We allow points with high innovation (considererd outliers by the Huber Function)

// pass to the new keyframe, so that bundle adjustment will finally decide

// if they are outliers or not. We don't want next frame to estimate its position

// with those points so we discard them in the frame.

for(int i=0; i<mCurrentFrame.N;i++)

{

if(mCurrentFrame.mvpMapPoints[i] && mCurrentFrame.mvbOutlier[i])

mCurrentFrame.mvpMapPoints[i]=static_cast<MapPoint*>(NULL);

}

}

// Reset if the camera get lost soon after initialization

if(mState==LOST)

{

if(mpMap->KeyFramesInMap()<=5)

{

cout << "Track lost soon after initialisation, reseting..." << endl;

mpSystem->Reset();

return;

}

}

if(!mCurrentFrame.mpReferenceKF)

mCurrentFrame.mpReferenceKF = mpReferenceKF;

mLastFrame = Frame(mCurrentFrame);

}

//存储相机位姿等信息,用于生成相机轨迹

// Store frame pose information to retrieve the complete camera trajectory afterwards.

if(!mCurrentFrame.mTcw.empty())

{

cv::Mat Tcr = mCurrentFrame.mTcw*mCurrentFrame.mpReferenceKF->GetPoseInverse();

mlRelativeFramePoses.push_back(Tcr);

mlpReferences.push_back(mpReferenceKF);

mlFrameTimes.push_back(mCurrentFrame.mTimeStamp);

mlbLost.push_back(mState==LOST);

}

else

{

// This can happen if tracking is lost

mlRelativeFramePoses.push_back(mlRelativeFramePoses.back());

mlpReferences.push_back(mlpReferences.back());

mlFrameTimes.push_back(mlFrameTimes.back());

mlbLost.push_back(mState==LOST);

}

}

其程序框图如下:

Tracking线程中,状态变量mState非常重要,用来确定系统的状态

若mState==NOT_INITIALIZED,则调用相应的相机初始化函数进行初始化,注意双目和RGBD相机都是StereoInitialization( )函数

初始化完成后系统进入正常的运行阶段

需要注意:

ORB-SLAM2 系统有两种模式(可以手动切换),以 mbOnlyTracking 变量进行区分:

- SLAM模型:所有线程都正常工作

- Localization模式:只有Tracking线程工作,其它线程均不工作

Localization 模式中也有两种情况(系统自动判定,根据当前跟踪情况自动切换),以 mbVO 变量进行区分:

- VO 情况:Visual Odometry

- 正常情况:常规,所有部分正常运行

Tracking线程按代码主要分为三大块:

当前帧位姿估计

如果是SLAM模式,首先根据mState判断系统之前的跟踪状态

如果之前跟踪丢失,则要不断进行重定位Tracking:Relocalization( ),直到当前帧与KF Database中的某个KF匹配上了

如果之前跟踪正常,则继续跟踪,一般来说使用Tracking:TrackWithMotionMode( )进行估计,但如果运动模型还未建立,或者刚刚进行了重定位,则使用Tracking:TrackReferenceKeyFrame( )进行估计

TrackReferenceKeyFrame( )指当前帧和其Reference KF进行匹配来估计位姿,其匹配的搜索量会大很多,所以当Tracking:TrackWithMotionMode( )不行的时候才会用

如果是 Localization 模式,那么如果之前系统跟踪丢失,同样不断进行重定位Tracking::Relocalization( )

如果之前系统跟踪正常,与 SLAM 模式不同的地方在于,其会判断当前处于 VO 情况还是正常情况

正常情况:与 SLAM 模式基本一致,根据运动模式是否已经建立而采用 Tracking::TrackWithMotionMode( ) 或 Tracking::Relocalization( )

VO 情况:与 SLAM 模式有区别,此时进行 Tracking::TrackWithMotionMode( ) 和 Tracking::Relocalization( ),优先使用 Relocalization( ) 的结果(此时重定位的结果更可靠一些),如果重定位失败,则采用 Tracking::TrackWithMotionMode( ) 继续跟踪直至丢失,如果重定位成功,则可以推出 VO 模型回到正常模式

运动模型估计与参考帧估计的区别:

- Tracking::TrackReferenceKeyFrame() 是根据 BoW 来在当前帧所有提取出的 FeaturePoints 和 Reference Frame 的 MapPoints 中进行匹配

- Tracking::TrackWithMotionMode() 中是有了位姿初值,所以可以根据该初值进行投影,将上一帧的 MapPoints 先投影至当前帧的一个大概区域,从而缩小了搜索的区域大小,减小了搜索量

局部地图跟踪

局部地图跟踪通过调用TrackLocalMap( )函数实现

SLAM模式下,只有当前帧位姿初值估计成功才会进行局部地图跟踪

Localization模式下,只有当前帧位姿初值估计成功且是非VO情况才会进行局部地图跟踪

在局部地图跟踪优化后,会判断优化的效果如何,如果效果可以的话,才会判断本次跟踪成功(当前帧位姿初值估计 + 局部地图跟踪 都成功才算成功),否则本次跟踪丢失

决定是否生成关键帧,并插入关键帧

如果上述两步都很成功的话,才判断是否插入关键帧,主要有以下几步:

- 更新运动模型

- 清除临时性的MapPoints

- 调用NeedNewKeyFrame( )判断是否需要插入关键帧

- 需要则调用CreateNewKeyFrame( )创建关键帧

当然如果刚初始化完没几帧就丢失了,说明初始化的质量不行,系统 Reset,重新初始化

若上述步骤都成功完成,未出现跟踪丢失的情况,则存储相机位姿等信息,用于生成相机轨迹

主要参考

ORB-SLAM2 论文&代码学习 —— Tracking 线程 - MingruiYu - 博客园 (cnblogs.com)

如有侵权,请联系删除