cartographer代码及论文分析

1. 基础

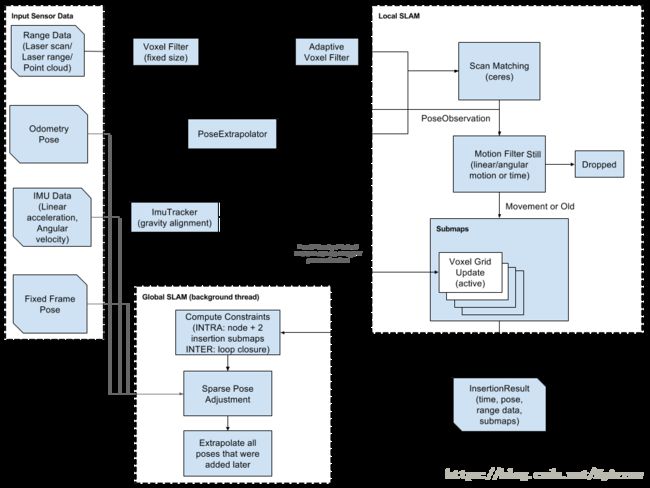

1.1 软件架构

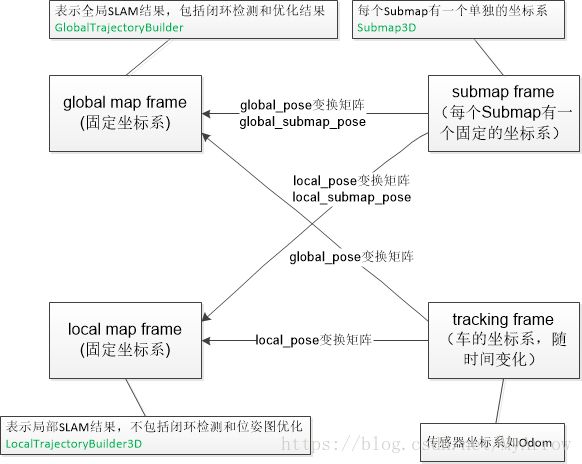

1.2 坐标系及相互关系

- 参考定义见:backpack_3d.lua

- Local map frame是一次slam过程中的原点。但是现在cartographer支持Incremental mapping。global map是整个地图的原点,local map是每一次建图的原点。

- map_frame = “map”:cartographer中使用的全局坐标系,最好保持默认,否则ROS的Rviz不认识其它的定义,导致无法可视化建图过程。

- tracking_frame=”base_link”:机器人中心坐标系,其它传感器数据都是以这个为基础进行插入的,它是整个SLAM系统的核心坐标系;cartographer_ros里面有个tf_bridge的类就是专门用来查询其它坐标系到此坐标系的转换关系。

- published_frame = “base_link”

- odom_frame = “odom” :published_frame与odom_frame配合使用,如果参数provide_odom_frame = true 那么最后可视化时,发布的转换消息是从 published_frame->odom_frame->map_frame, 也即cartographer内部计算出了未经回环检测的局部图坐标到优化后的全局图坐标之间的转换关系并发布了出来。在跑官网的二维背包例子时,在map坐标周围一直跳动的odom就是这个玩意。

1.3 ROS坐标系规范

-

ROS坐标系规范:REP (定义了坐标系命名规范及各自的含义)

-

base_link (机器人底盘坐标系)

- The coordinate frame called base_link is rigidly attached to the mobile robot base. The base_link can be attached to the base in any arbitrary position or orientation; for every hardware platform there will be a different place on the base that provides an obvious point of reference.

-

base_laser

- 激光雷达的坐标系,与激光雷达的安装点有关,其与base_link的tf为固定的。

-

odom (用于短期局部参考)

- The coordinate frame called odom is a world-fixed frame. The pose of a mobile platform in the odom frame can drift over time, without any bounds. This drift makes the odom frame useless as a long-term global reference. However, the pose of a robot in the odom frame is guaranteed to be continuous, meaning that the pose of a mobile platform in the odom frame always evolves in a smooth way, without discrete jumps.

- In a typical setup the odom frame is computed based on an odometry source, such as wheel odometry, visual odometry or an inertial measurement unit.

- The odom frame is useful as an accurate, short-term local reference, but drift makes it a poor frame for long-term reference.

-

map (用于长期全局参考)

- The coordinate frame called map is a world-fixed frame, with its Z-axis pointing upwards. The pose of a mobile platform, relative to the map frame, should not significantly drift over time. The map frame is not continuous, meaning the pose of a mobile platform in the map frame can change in discrete jumps at any time.

- In a typical setup, a localization component constantly re-computes the robot pose in the map frame based on sensor observations, therefore eliminating drift, but causing discrete jumps when new sensor information arrives.

- The map frame is useful as a long-term global reference, but discrete jumps in position estimators make it a poor reference frame for local sensing and acting.

-

earth (用于多个机器人的场景)

- The coordinate frame called earth is the origin of ECEF.

- This frame is designed to allow the interaction of multiple robots in different map frames. If the application only needs one map the earth coordinate frame is not expected to be present. In the case of running with multiple maps simultaneously the map and odom and base_link frames will need to be customized for each robot. If running multiple robots and bridging data between them, the transform frame_ids can remain standard on each robot if the other robots’ frame_ids are rewritten.

- If the map frame is globally referenced the publisher from earth to map can be a static transform publisher. Otherwise the earth to map transform will usually need to be computed by taking the estimate of the current global position and subtracting the current estimated pose in the map to get the estimated pose of the origin of the map.

- In case the map frame’s absolute positon is unknown at the time of startup, it can remain detached until such time that the global position estimation can be adaquately evaluated. This will operate in the same way that a robot can operate in the odom frame before localization in the map frame is initialized.

-

坐标系间的关系

- 每个坐标系只有一个父坐标系,可以有多个子坐标系

- 坐标系间的树形结构如下所示:

earth --> map --> odom --> base_link - 对于只有一个机器人的环境,在起始位置,map与odom坐标系是重合的。随着时间的推移是不重合的,而出现的偏差就是里程计的累积误差。那map–>odom的tf怎么得到?就是在一些校正传感器合作校正的package比如gmapping会给出一个位置估计(localization),这可以得到map–>base_link的tf,所以估计位置和里程计位置的偏差也就是odom与map的坐标系偏差。所以,如果你的odom计算没有错误,那么map–>odom的tf就是0.

-

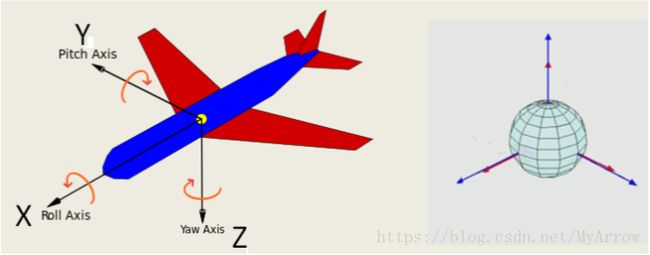

Axis Orientation (坐标轴方向)

1.4 概率(Probability)和赔率(Odds)

- 比较表

| 比较项 | ODDS(赔率) | PROBABILITY(概率) |

|---|---|---|

| Meaning | Odds refers to the chances in favor of the event to the chances against it. | Probability refers to the likelihood of occurrence of an event. |

| Expressed in | Ratio | Percent or decimal |

| Lies between | 0 to ∞ | 0 to 1 |

| Formula | Occurrence/Non-occurrence | Occurrence/Whole |

- 转换代码

// probability_values.h

// Clamps 'value' to be in the range ['min', 'max'].

template

T Clamp(const T value, const T min, const T max) {

if (value > max) {

return max;

}

if (value < min) {

return min;

}

return value;

}

inline uint16 BoundedFloatToValue(const float float_value,

const float lower_bound,

const float upper_bound) {

const int value =

common::RoundToInt(

(common::Clamp(float_value, lower_bound, upper_bound) - lower_bound) *

(32766.f / (upper_bound - lower_bound))) +

1;

// DCHECK for performance.

DCHECK_GE(value, 1);

DCHECK_LE(value, 32767);

return value;

}

} // namespace

inline float Odds(float probability) {

return probability / (1.f - probability);

}

inline float ProbabilityFromOdds(const float odds) {

return odds / (odds + 1.f);

}

// Converts the given probability to log odds.

inline float Logit(float probability) {

return std::log(probability / (1.f - probability));

}

const float kMaxLogOdds = Logit(kMaxProbability);

const float kMinLogOdds = Logit(kMinProbability);

// Converts a probability to a log odds integer. 0 means unknown, [kMinLogOdds,

// kMaxLogOdds] is mapped to [1, 255].

inline uint8 ProbabilityToLogOddsInteger(const float probability) {

const int value = common::RoundToInt((Logit(probability) - kMinLogOdds) *

254.f / (kMaxLogOdds - kMinLogOdds)) +

1;

CHECK_LE(1, value);

CHECK_GE(255, value);

return value;

}

inline float ProbabilityToCorrespondenceCost(const float probability) {

return 1.f - probability;

}

inline float CorrespondenceCostToProbability(const float correspondence_cost) {

return 1.f - correspondence_cost;

}

constexpr float kMinProbability = 0.1f;

constexpr float kMaxProbability = 1.f - kMinProbability;

constexpr float kMinCorrespondenceCost = 1.f - kMaxProbability;

constexpr float kMaxCorrespondenceCost = 1.f - kMinProbability;

// Clamps probability to be in the range [kMinProbability, kMaxProbability].

inline float ClampProbability(const float probability) {

return common::Clamp(probability, kMinProbability, kMaxProbability);

}

// Clamps correspondece cost to be in the range [kMinCorrespondenceCost,

// kMaxCorrespondenceCost].

inline float ClampCorrespondenceCost(const float correspondence_cost) {

return common::Clamp(correspondence_cost, kMinCorrespondenceCost,

kMaxCorrespondenceCost);

1.5 调试环境

-

安装 ROS Kinetic

http://wiki.ros.org/kinetic/Installation/Ubuntu -

Build and install proto3.

https://google-cartographer.readthedocs.io/en/latest/ (安装完之后重启系统)

或

https://github.com/google/protobuf/blob/master/src/README.md -

Cartographer ROS for TurtleBots (cartographer, cartographer_ros, cartographer_turtlebot)

https://google-cartographer-ros-for-turtlebots.readthedocs.io/en/latest/ -

下载TurtleBot3

cd ~/burger_ws/src/

git clone https://github.com/ROBOTIS-GIT/turtlebot3_msgs.git

git clone https://github.com/ROBOTIS-GIT/turtlebot3.git

cd ~/catkin_ws && catkin_make -

Public Data

http://google-cartographer-ros.readthedocs.io/en/latest/data.html#public-data -

Resource

-

Real-time indoor SLAM with glass detection

https://github.com/uts-magic-lab/slam_glass -

Cartographer Public Data

https://google-cartographer-ros.readthedocs.io/en/latest/data.html -

cartographer_hector_tracker

It is an example of 3D SLAM with a tilting 2D lidar on a mobile robot

https://github.com/tu-darmstadt-ros-pkg/cartographer_hector_tracker -

Bag validation tool

$ rosrun cartographer_ros cartographer_rosbag_validate -bag_filename ~/Downloads/2017-10-17-08-59-28.bag -

VeloView performs real-time visualization of live captured 3D LiDAR data from Velodyne’s HDL sensors (HDL-64E, HDL-32E, and VLP-16)

http://www.paraview.org/VeloView/

https://github.com/Kitware/VeloView

-

-

ROS Tutorials

2. Cartographer主要贡献

- 主要目的:减小计算闭环检测(Loop Closure)的资源消耗,以达到实时应用的目的,不追求高精度(可以达到r=5cm级别的精度)

- 主要思想: 通过闭环检测来消除构图过程中产生的累积误差,用于闭环检测的基本单元是Submap。

- 重点:

- 融合多传感器数据以创建局部Submap

- 用于闭环检测的Scan Matching策略的实现

3. 扫描匹配(Scan-Matching)

- ICP:Iterative Closest Point

- ICL:Iterative Closest Line

3.1 Scan-to-Submap

- 代码实现:

std::unique_ptr LocalTrajectoryBuilder2D::ScanMatch(

const common::Time time, const transform::Rigid2d& pose_prediction,

const sensor::RangeData& gravity_aligned_range_data) {

std::shared_ptr matching_submap =

active_submaps_.submaps().front();

// The online correlative scan matcher will refine the initial estimate for

// the Ceres scan matcher.

transform::Rigid2d initial_ceres_pose = pose_prediction;

sensor::AdaptiveVoxelFilter adaptive_voxel_filter(

options_.adaptive_voxel_filter_options());

// voxel filter

const sensor::PointCloud filtered_gravity_aligned_point_cloud =

adaptive_voxel_filter.Filter(gravity_aligned_range_data.returns);

if (filtered_gravity_aligned_point_cloud.empty()) {

return nullptr;

}

// RTCSM scan matching refine the pose_prediction that is from

// IMU/Odom/Kinetics for Ceres scan matcher

if (options_.use_online_correlative_scan_matching()) {

CHECK_EQ(options_.submaps_options().grid_options_2d().grid_type(),

proto::GridOptions2D_GridType_PROBABILITY_GRID);

double score = real_time_correlative_scan_matcher_.Match(

pose_prediction, filtered_gravity_aligned_point_cloud,

*static_cast(matching_submap->grid()),

&initial_ceres_pose);

kFastCorrelativeScanMatcherScoreMetric->Observe(score);

}

auto pose_observation = common::make_unique();

ceres::Solver::Summary summary;

// Ceres scan matcher (Non-linear Least Squares)

ceres_scan_matcher_.Match(pose_prediction.translation(), initial_ceres_pose,

filtered_gravity_aligned_point_cloud,

*matching_submap->grid(), pose_observation.get(),

&summary);

if (pose_observation) {

kCeresScanMatcherCostMetric->Observe(summary.final_cost);

double residual_distance =

(pose_observation->translation() - pose_prediction.translation())

.norm();

kScanMatcherResidualDistanceMetric->Observe(residual_distance);

double residual_angle = std::abs(pose_observation->rotation().angle() -

pose_prediction.rotation().angle());

kScanMatcherResidualAngleMetric->Observe(residual_angle);

}

return pose_observation;

}

3.1.1 RTCSM: Real-Time Correlative Scan Matching (by Olson)

- 方法:把求两次位姿间的刚体变换转换为一个概率问题:找到一个刚体变换(即机器人的新位姿)使用观测数据出现的概率最大。

- 方法贡献:提供了一种高效计算 p ( z ∣ x i , m ) p(z|x_i,m) p(z∣xi,m)的方法。

- 目标:基于机器人的当前Pose, 求后验概率分布

p ( x i ∣ x i − 1 , u , m , z } p(x_i|x_{i-1},u,m,z} p(xi∣xi−1,u,m,z}- x i − 1 x_{i-1} xi−1:前一个已求的Pose

- u u u:机器人的运动参数

- m m m: 环境模型(environment model)

- x x x:激光扫描点(laser scan observation)

- 目标简化:应用Bayes规则并删除不相关的条件

p ( x i ∣ x i − 1 , u , m , z } ∝ p ( z ∣ x i , m ) p ( x i ∣ x i − 1 , u ) p(x_i|x_{i-1},u,m,z}\propto p(z|x_i,m)p(x_i|x_{i-1},u) p(xi∣xi−1,u,m,z}∝p(z∣xi,m)p(xi∣xi−1,u)- p ( z ∣ x i , m ) p(z|x_i,m) p(z∣xi,m):观测模型(observation model),表示环境模型和机器人的Pose已知的情况下,我们有多大概率可以看到这些数据,其计算是复杂的,且有多个极值

- p ( x i ∣ x i − 1 , u ) p(x_i|x_{i-1},u) p(xi∣xi−1,u):运动模型(motion model),根据IMU/Odom或控制输入获得,是一个多变量高斯分布(multivariate Guassian Distribution)

- 方法输出:

- 更健壮的最大似然估计(robust maximum likelihood estimation)

- 不确定性估计(principled uncertainty estimate)

- 计算观测模型(假设Lidar 采样点 z j z_j zj是相互独立的)

p ( z ∣ x i , m ) = ∏ j p ( z j ∣ x i , m ) p(z|x_i,m) = \prod_j p(z_j|x_i,m) p(z∣xi,m)=j∏p(zj∣xi,m) - 加速计算 p ( z ∣ x i , m ) p(z|x_i,m) p(z∣xi,m):对于许多不同的候选 x i x_i xi,计算 p ( z ∣ x i , m ) p(z|x_i,m) p(z∣xi,m),从而找出最优的 x i x_i xi

- 多分辨率方法(Multi-Level Resolution Implementation)

- 第一步:创建两个map, 一个低分辨率(如30cm),一个高分辨率(如3cm)

- 第二步:在低分辨率的map中找到概率最大的区域

- 第三步:在高分辨率的map中的上面确定的区域内寻找最大值,这样确保是全局最大值,而不是局部极大值

- GPU加速计算

- 多分辨率方法(Multi-Level Resolution Implementation)

- 计算协方差:评估Scan Matcher的不确定性

- 当 x i x_i xi的最优值被估计之后,可以用多变量高斯分布来拟合这些数据,设 x i ( j ) x_i^{(j)} xi(j)是 x i x_i xi的第 j j j次估计

K = ∑ j x i ( j ) x i ( j ) T p ( x i ( j ) ∣ x i − 1 , u , m , z ) K=\sum_j x_i^{(j)}x_i^{(j)^T} p(x_i^{(j)} | x_{i-1}, u,m,z) K=j∑xi(j)xi(j)Tp(xi(j)∣xi−1,u,m,z)

u = ∑ j x i ( j ) p ( x i ( j ) ∣ x i − 1 , u , m , z ) u=\sum_j x_i^{(j)} p(x_i^{(j)} | x_{i-1}, u,m,z) u=j∑xi(j)p(xi(j)∣xi−1,u,m,z)

s = ∑ j p ( x i ( j ) ∣ x i − 1 , u , m , z ) s=\sum_j p(x_i^{(j)} | x_{i-1}, u,m,z) s=j∑p(xi(j)∣xi−1,u,m,z)

Σ x i = 1 s K − 1 s 2 u u T \Sigma_{x_i} = \frac{1}{s}K - \frac{1}{s^2}u \, u^T Σxi=s1K−s21uuT - 根据 p ( z ∣ x i , m ) p(z|x_i,m) p(z∣xi,m)估计Scan Matcher的不确定性主要考虑两个不确定性因素:

- 传感器本身的噪声

- 哪些查询点与map的哪部分相关的不确定性

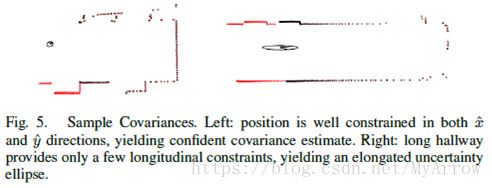

- 不确定性图形示例

- 当 x i x_i xi的最优值被估计之后,可以用多变量高斯分布来拟合这些数据,设 x i ( j ) x_i^{(j)} xi(j)是 x i x_i xi的第 j j j次估计

4. 关键流程

4.1 IMU消息处理流程

Node::HandleImuMessage->

SensorBridge::HandleImuMessage->

CollatedTrajectoryBuilder::AddSensorData->

CollatedTrajectoryBuilder::AddData->

TrajectoryCollator::AddSensorData->

//把传感器数据放在队列中

//TrajectoryCollator(std::unordered_map trajectory_to_queue_)

//trajectory_to_queue_.at(trajectory_id).Add(std::move(queue_key), std::move(data))

OrderedMultiQueue::Add(const QueueKey& queue_key, std::unique_ptr data)->

OrderedMultiQueue::Dispatch()->

//callback为CollatedTrajectoryBuilder::HandleCollatedSensorData

//见CollatedTrajectoryBuilder::CollatedTrajectoryBuilder

callback(..) ->

Dispatchable::AddToTrajectoryBuilder(TrajectoryBuilderInterface*)->

GlobalTracjectoryBuilder::addSensorData(sensor_id, Sensordata)

4.2 Laser Scan(2D)消息处理流程

- 包括Scan Matching、Insert Submap, 关键函数 LocalTrajectoryBuilder2D::AddAccumulatedRangeData。

GlobalTrajectoryBuilder::AddSensorData(const std::string& sensor_id, const sensor::TimedPointCloudData& timed_point_cloud_data)

std::unique_ptr LocalTrajectoryBuilder2D::AddRangeData(const std::string& sensor_id,const sensor::TimedPointCloudData& range_data)

TimedPointCloudOriginData RangeDataCollator::AddRangeData(string& sensor_id,TimedPointCloudData& timed_point_cloud_data)

//把一个点云数据加入到vector中,以搜集一帧数据

RangeData LocalTrajectoryBuilder2D::TransformToGravityAlignedFrameAndFilter

sensor::VoxelFilter(options_.voxel_filter_size()).Filter(cropped.returns)

MatchingResult LocalTrajectoryBuilder2D::AddAccumulatedRangeData(Time,RangeData&, Rigid3d& gravity_alignment) (***)

-Rigid3d PoseExtrapolator::ExtrapolatePose(const common::Time time) (得用IMU进行旋转、Odom进行平移预测,从而获得新的Pose)

-Rigid2d LocalTrajectoryBuilder2D::ScanMatch(Time time, Rigid2d& pose_prediction,RangeData& gravity_aligned_range_data) (****)

PointCloud AdaptiveVoxelFilter::Filter(const PointCloud& point_cloud) (体素滤波)

// Aligns 'point_cloud' within the 'grid' given an initial_pose_estimate'.

// Scan-to-Submap匹配 (RealTimeCorrelativeScanMatcher2D)

double RealTimeCorrelativeScanMatcher2D::Match(Rigid2d& initial_pose_estimate, //输入 @函数返回分数

PointCloud& point_cloud, //点云数据

ProbabilityGrid& probability_grid, //submap grid

Rigid2d* pose_estimate) //输出新的Pose

// Generates a collection of rotated scans.

vector GenerateRotatedScans(PointCloud& point_cloud,SearchParameters& search_parameters)

// Translates and discretizes the rotated scans into a vector of integer indices.

vector DiscretizeScans(MapLimits& map_limits,vector& scans,Translation2f& initial_translation)

// 在搜索范围内均匀生成候选者

vector RealTimeCorrelativeScanMatcher2D::GenerateExhaustiveSearchCandidates(SearchParameters& search_parameters)

//为每个候选者打分(Computes the score for each Candidate2D in a collection. The cost is computed as the sum of probabilities,)

void RealTimeCorrelativeScanMatcher2D::ScoreCandidates(ProbabilityGrid& probability_grid,

vector& discrete_scans,

SearchParameters& search_parameters,

vector* const candidates)

选一个分数最高的候选者Pose返回

// 创建三个ResidualBlock<空间占用、平移、旋转>然后利用ceres求解最优pose_estimate

// 根据Odom预测值和RTCSM估计值的残差进行优化求解

void CeresScanMatcher2D::Match(Eigen::Vector2d& target_translation, //通过IMU, Odom运动预测的值

transform::Rigid2d& initial_pose_estimate, //RTCSM所估计的最佳值 (scan-to-map)

sensor::PointCloud& point_cloud,

Grid2D& grid,

transform::Rigid2d* const pose_estimate, //优化的输出Pose结果

ceres::Solver::Summary* const summary)

//基于一个pose,计算从'point_cloud'匹配到'grid'的cost, 残差个数与点云个数一致

CostFunction* OccupiedSpaceCostFunction2D::CreateAutoDiffCostFunction

//计算 the cost of translating 'pose' to 'target_translation', 两个残差(x,y)

CostFunction* TranslationDeltaCostFunctor2D::CreateAutoDiffCostFunction

//计算 the cost of rotating 'pose' to 'target_angle',一个残差(偏航角之差)

CostFunction* RotationDeltaCostFunctor2D::CreateAutoDiffCostFunction

-void PoseExtrapolator::AddPose(const common::Time time,const transform::Rigid3d& pose)

-std::unique_ptrLocalTrajectoryBuilder2D::InsertIntoSubmap( //***把Laser Scan插入submap

const common::Time time, const sensor::RangeData& range_data_in_local,

const sensor::RangeData& gravity_aligned_range_data,

const transform::Rigid3d& pose_estimate,

const Eigen::Quaterniond& gravity_alignment)

void ActiveSubmaps2D::InsertRangeData(const sensor::RangeData& range_data)

void Submap2D::InsertRangeData(RangeData& range_data,RangeDataInserterInterface* range_data_inserter)

void ProbabilityGridRangeDataInserter2D::Insert(RangeData& range_data, GridInterface* const grid) // grid is ProbabilityGrid

void CastRays(RangeData& range_data,vector& hit_table,vector& miss_table,

insert_free_space,ProbabilityGrid* const probability_grid)

4.3 Laser Scan(3D)消息处理流程

GlobalTrajectoryBuilder::AddSensorData( string& sensor_id,sensor::TimedPointCloudData& timed_point_cloud_data)->

*LocalTrajectoryBuilder3D::AddRangeData(string& sensor_id,sensor::TimedPointCloudData& unsynchronized_data)->

TimedPointCloudOriginData RangeDataCollator::AddRangeData(string& sensor_id,sensor::TimedPointCloudData& timed_point_cloud_data)

LocalTrajectoryBuilder3D::AddAccumulatedRangeData(Time time, sensor::RangeData& filtered_range_data_in_tracking)-> (***)

Rigid3d PoseExtrapolator::ExtrapolatePose(const common::Time time)

float RealTimeCorrelativeScanMatcher3D::Match(const transform::Rigid3d& initial_pose_estimate,const sensor::PointCloud& point_cloud,

const HybridGrid& hybrid_grid,transform::Rigid3d* pose_estimate) // 暴力匹配

void CeresScanMatcher3D::Match(const Eigen::Vector3d& target_translation, const transform::Rigid3d& initial_pose_estimate,

const std::vector& point_clouds_and_hybrid_grids,

transform::Rigid3d* const pose_estimate, ceres::Solver::Summary* const summary)

// 每个点云数据有两个残差:高低精度(点云->地图),根据概率进行计算

// 点云残差=scaling_factor * (1-probability)

// 平移3个残差,旋转3个残差

void PoseExtrapolator::AddPose(const common::Time time,const transform::Rigid3d& pose) // 把新的Pose加入双向队列

std::unique_ptr LocalTrajectoryBuilder3D::InsertIntoSubmap(

const common::Time time,

const sensor::RangeData& filtered_range_data_in_local,

const sensor::RangeData& filtered_range_data_in_tracking,

const sensor::PointCloud& high_resolution_point_cloud_in_tracking,

const sensor::PointCloud& low_resolution_point_cloud_in_tracking,

const transform::Rigid3d& pose_estimate,

const Eigen::Quaterniond& gravity_alignment)->

void ActiveSubmaps3D::InsertRangeData(sensor::RangeData& range_data,Eigen::Quaterniond& gravity_alignment)->

void Submap3D::InsertRangeData(const sensor::RangeData& range_data,RangeDataInserter3D& range_data_inserter,

const int high_resolution_max_range)->

void RangeDataInserter3D::Insert(const sensor::RangeData& range_data,HybridGrid* hybrid_grid)->

// 调用两次,分别把RangeData插入高/低精度HybridGrid

bool ApplyLookupTable(const Eigen::Array3i& index, const std::vector& table)

// 通过查表方法更新Cell的概率值[1,32767],与概率对应的值(即归一化值)

*NodeId PoseGraph3D::AddNode(std::shared_ptr constant_data, const int trajectory_id,

std::vector>& insertion_submaps)->

PoseGraphData.Append //1) 为此Trajectory增加TrajectoryNode

PoseGraphData.Append //2) 如果对应的Submap3D没有被增加到轨迹中,则增加到轨迹中

*PoseGraph3D::ComputeConstraintsForNode(NodeId& node_id,vector> insertion_submaps,

bool newly_finished_submap) //3) 为新增加的节点计算约束

vector PoseGraph3D::InitializeGlobalSubmapPoses(...)-> // 获取新插入的两个Submap的SubMapId

OptimizationProblem3D::AddSubmap(int trajectory_id, transform::Rigid3d& global_submap_pose) //把Submap的全局位姿增加到优化器中

根据新节点的局部位姿计算其全局位姿

OptimizationProblem3D::AddTrajectoryNode(int trajectory_id,NodeSpec3D& node_data) // 把新Node的局部和全局位姿增加到优化器中

PoseGraphData.constraints.push_back(..) //计算新节点与每个插入子图(2个)间的约束变换,然后增加到约束列表中

PoseGraph3D::ComputeConstraint(NodeId& node_id,SubmapId& submap_id) //计算新节点与以前每个Submap的约束变换

ConstraintBuilder3D::MaybeAddConstraint(...)

ConstraintBuilder3D::ComputeConstraint(...) //计算(submap i <- node j) 的约束变换

unique_ptrFastCorrelativeScanMatcher3D::Match(...)

或

unique_ptr FastCorrelativeScanMatcher3D::Match(...)->

unique_ptrFastCorrelativeScanMatcher3D::MatchWithSearchParameters(...)->

Candidate3D FastCorrelativeScanMatcher3D::BranchAndBound(...)

CeresScanMatcher3D::Match(...)

或

ConstraintBuilder3D::MaybeAddGlobalConstraint(...)

PoseGraph3D::ComputeConstraintsForOldNodes(const SubmapId& submap_id) //计算以前加入的Nodes与新加入的Submap间的约束

PoseGraph3D::DispatchOptimization() // 闭环之后,当节点增加到90 (optimize_every_n_nodes in pose_graph.lua),

4.3.1 FastCorrelativeScanMatcher3D::MatchFullSubmap

- 输入:

- Node(LaserScan)在global frame中的旋转

- Submap在global frame中的旋转

- Node的点云数据

- 输出:匹配结果

struct Result {

float score;

transform::Rigid3d pose_estimate;

float rotational_score;

float low_resolution_score;

};

- 功能:在指定的立方体内搜索得分最高的位姿(存放在Result.pose_estimate)中

- 搜索立方体的边长:设定边长的一半 + 点云中的最远点的距离

- 每个体素的搜索角度:180度

struct SearchParameters {

const int linear_xy_window_size; // voxels

const int linear_z_window_size; // voxels

const double angular_search_window; // radians

const MatchingFunction* const low_resolution_matcher;

};

const int linear_window_size =

(width_in_voxels_ + 1) / 2 +

common::RoundToInt(max_point_distance / resolution_ + 0.5f);

const auto low_resolution_matcher = scan_matching::CreateLowResolutionMatcher(

low_resolution_hybrid_grid_, &constant_data.low_resolution_point_cloud);

const SearchParameters search_parameters{

linear_window_size, linear_window_size, M_PI, &low_resolution_matcher};

- 执行搜索:FastCorrelativeScanMatcher3D::MatchWithSearchParameters

4.3.2 FastCorrelativeScanMatcher3D::MatchWithSearchParameters

- 输入:

- 搜索空间

- Node(LaserScan)在global frame中的旋转

- Submap在global frame中的旋转

- Node的点云数据

- 统计直方图

- 重力方向

- 最小分

- 输出:匹配结果

- 功能:

- 生成离散的3D扫描点:GenerateDiscreteScans

struct DiscreteScan3D {

transform::Rigid3f pose; // 此点云对应Node的位姿

// Contains a vector of discretized scans for each 'depth'.

std::vector> cell_indices_per_depth;

float rotational_score; //此角度旋转匹配得分,通过匹配统计直方图而得(向量点乘)

};

- 生成Candidate3D: ComputeLowestResolutionCandidates

struct Candidate3D {

Candidate3D(const int scan_index, const Eigen::Array3i& offset)

: scan_index(scan_index), offset(offset) {}

static Candidate3D Unsuccessful() {

return Candidate3D(0, Eigen::Array3i::Zero());

}

// Index into the discrete scans vectors.

// 对应搜索角度

int scan_index;

// Linear offset from the initial pose in cell indices. For lower resolution

// candidates this is the lowest offset of the 2^depth x 2^depth x 2^depth

// block of possibilities.

Eigen::Array3i offset; // 对应空间搜索位置索引

// Score, higher is better.

float score = -std::numeric_limits::infinity();

// Score of the low resolution matcher.

float low_resolution_score = 0.f;

bool operator<(const Candidate3D& other) const { return score < other.score; }

bool operator>(const Candidate3D& other) const { return score > other.score; }

};

- a

- a

- a

4.3.2 FastCorrelativeScanMatcher3D::GenerateDiscreteScans

- 输出:std::vector< DiscreteScan3D>

- 功能:

- 根据点云中最远点的距离及搜索角度窗口,计算每个需要尝试匹配的角度

- 把节点的全局坐标转换成submap的局部坐标

- 把Node(LaserScan)直方图与Submap直方图进行匹配,每个角度都进行一次匹配并得到一个分数

// 计算每个搜索角度的匹配得分

std::vector RotationalScanMatcher::Match(Eigen::VectorXf& histogram,float initial_angle,std::vector& angles) ->

// 按角度旋转直方图

Eigen::VectorXf RotateHistogram(const Eigen::VectorXf& histogram,const float angle)

// 通过点乘计算两个直方图的相似性,越相似,分数越高

float MatchHistograms(Eigen::VectorXf& submap_histogram, Eigen::VectorXf& scan_histogram)

- 根据每个搜索角度计算点云的新Pose, 再加上搜索参数、点云、此角度的匹配分数,生成一个DiscreteScan3D (每上搜索角度一个DiscreteScan3D), 实现函数:DiscreteScan3D FastCorrelativeScanMatcher3D::DiscretizeScan,从而输出std::vector

- 为每个搜索角度生成一个DiscreteScan3D

4.3.3 DiscreteScan3D FastCorrelativeScanMatcher3D::DiscretizeScan

- 输出:DiscreteScan3D

- 功能:

- PrecomputationGrid3D是HybridGrid的8位值版本,PrecomputationGrid3D使用8位表示概率值,而HybridGrid使用16位值表示概率

- 根据全分辨率各低分辨率的数量(深度数),为每一层生成所有激光点在PrecomputationGrid3D中的位置索引

- 最后把点云的位姿、所有层的激光点索引、在此角度的旋转得分生成一个DiscreteScan3D

DiscreteScan3D{pose, cell_indices_per_depth, rotational_score};

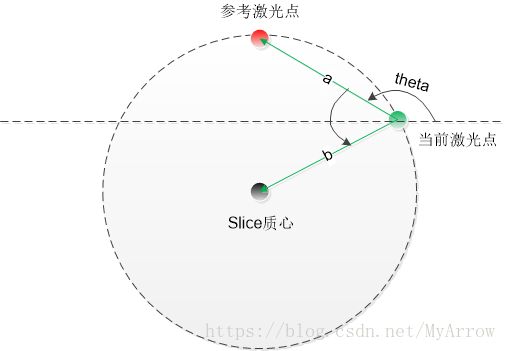

4.3.4 Eigen::VectorXf RotationalScanMatcher::ComputeHistogram

Eigen::VectorXf RotationalScanMatcher::ComputeHistogram(

const sensor::PointCloud& point_cloud, const int histogram_size)

- 功能:计算一个点云的直方图

- 直方图定义:

- bucket index:当前激光点到最后一个参考激光点的连线的斜率对应的角度的离散值

- bucket value:激光点的值的累加,此值定义为:“当前点与参考点的连线”与“当前点与质心点的连线”的同向程度,同向为0,垂直最大

- 直方图计算方法:

void AddPointCloudSliceToHistogram(const sensor::PointCloud& slice,

Eigen::VectorXf* const histogram) {

if (slice.empty()) {

return;

}

// We compute the angle of the ray from a point to the centroid of the whole

// point cloud. If it is orthogonal to the angle we compute between points, we

// will add the angle between points to the histogram with the maximum weight.

// This is to reject, e.g., the angles observed on the ceiling and floor.

const Eigen::Vector3f centroid = ComputeCentroid(slice);

Eigen::Vector3f last_point = slice.front();

for (const Eigen::Vector3f& point : slice) {

const Eigen::Vector2f delta = (point - last_point).head<2>();

const Eigen::Vector2f direction = (point - centroid).head<2>();

const float distance = delta.norm();

if (distance < kMinDistance || direction.norm() < kMinDistance) {

continue;

}

if (distance > kMaxDistance) {

last_point = point;

continue;

}

const float angle = common::atan2(delta);

const float value = std::max(

0.f, 1.f - std::abs(delta.normalized().dot(direction.normalized())));

AddValueToHistogram(angle, value, histogram);

}

}

4.3.5 FastCorrelativeScanMatcher3D::ComputeLowestResolutionCandidates

- 输出:std::vector< Candidate3D>

- a

- a

5. 基础工具

5.1 Protobuf

- Protocol Buffers是Google出品并开源的语言和平台均中立的数据序列化和反序列化工具,使用步骤:

- 定义.proto文件

- 生成Protobuf编译器protoc编译.proto文件生成*.pb.h和*.pb.cc

- 写应用程序并包含*.pb.h,然后进行编译即可

- 示例.proto文件

syntax = "proto3";

package testx; #对应namespace

message Person { #对应class name

string name = 1;

int32 id = 2;

string email = 3;

}

- 示例CMakeLists.txt

cmake_minimum_required(VERSION 2.8)

project(test)

find_package(protobuf CONFIG REQUIRED)

set(CMAKE_INCLUDE_CURRENT_DIR TRUE)

#find_package(Ceres REQUIRED)

include_directories(${PROTOBUF_INCLUDE_DIRS})

# test

add_executable(test test.cc test.pb.cc)

target_link_libraries(test protobuf::libprotobuf)

5. TurtleBot3-Burger + Cartographer

5.1 解决IMU和Odom Timestamp不一致的问题

- 使用系统当前时间替换IMU和Odom的Timestamp

- time_conversion.h

// cartographer_ros/cartographer_ros/time_conversion.h

::cartographer::common::Time FromRosNow();

- time_conversion.cc

// To improve Odom, IMU time inconsistent

// cartographer_ros/cartographer_ros/time_conversion.cc

::cartographer::common::Time FromRosNow(){

const std::chrono::nanoseconds now =

std::chrono::duration_cast(

std::chrono::system_clock::now().time_since_epoch());

::ros::Time rosTime;

rosTime.sec = now.count()/1000000000;

rosTime.nsec = now.count()%1000000000;

return FromRos(rosTime);

}

- 代码修改

// cartographer_ros/cartographer_ros/msg_conversion.cc

// Func: LaserScanToPointCloudWithIntensities(const LaserMessageType& msg)

// ::cartographer::common::Time timestamp = FromRos(msg.header.stamp);

::cartographer::common::Time timestamp = FromRosNow();

// cartographer_ros/cartographer_ros/sensor_bridge.cc

// Func: SensorBridge::SensorBridge

// const carto::common::Time time = FromRos(msg->header.stamp);

// const auto sensor_to_tracking = tf_bridge_.LookupToTracking(

// time, CheckNoLeadingSlash(msg->child_frame_id));

const carto::common::Time time = FromRosNow();

const auto sensor_to_tracking = tf_bridge_.LookupToTracking(

time, CheckNoLeadingSlash(msg->header.frame_id));

// cartographer_ros/cartographer_ros/sensor_bridge.cc

// Func: SensorBridge::ToImuData

//const carto::common::Time time = FromRos(msg->header.stamp);

const carto::common::Time time = FromRosNow();

5.2 删除告警

- TfBridge::LookupToTracking

// cartographer_ros/cartographer_ros/tf_bridge.cc

// return ::cartographer::common::make_unique<

// ::cartographer::transform::Rigid3d>(ToRigid3d(buffer_->lookupTransform(

// tracking_frame_, frame_id, requested_time, timeout)));

return ::cartographer::common::make_unique<

::cartographer::transform::Rigid3d>(ToRigid3d(buffer_->lookupTransform(

tracking_frame_, frame_id, ::ros::Time(0.), timeout)));

5.3 把Laser Scan转换为PointCloud

- LaserScanToPointCloudWithIntensities

// Check laser scan data (msg_conversion.cc)

LOG(ERROR) << "range_min=" << msg.range_min << ", range_max=" << msg.range_max \

<< ", angle_min=" << msg.angle_min << ", angle_max=" << msg.angle_max \

<< ", angle_increment=" << msg.angle_increment << ", msg.ranges.size=" << msg.ranges.size() \

<< ", frame_id=" << msg.header.frame_id << ", scan_time=" << msg.scan_time \

<< ", time_increment=" << msg.time_increment;

5.4 3D截取匹配的数据

std::unique_ptr

LocalTrajectoryBuilder3D::AddRangeData(

const std::string& sensor_id,

const sensor::TimedPointCloudData& unsynchronized_data)

5.5 2D截取匹配的数据

template

std::tuple

LaserScanToPointCloudWithIntensities(const LaserMessageType& msg)

6. 关键元素

6.1 体素滤波器(Voxel Filter)

- adaptive_voxel_filter (自适应体素滤波器)

- 用于为Scan Matching计算稀疏点云(sparser point cloud)

- 为了找到满足要求的点云数据,其体素(Voxel)的边长可变。

- 最大边长:因为是自适应滤波,首先用最大的体素边长max_length尝试,滤波之后的点数是否大于min_num_points, 如果大于返回结果;否则边长减半并进行滤波,直至找到大于min_num_points为止。(注:一般有1万多个点云数据,经过体素滤波之后只有200多个点云数据用于匹配)

- 代码参见:sensor/internal/voxel_filter.cc

- 自适应体素滤波器的参数:

max_length = 2., // 最大边长

min_num_points = 150, // 点云需要保留的最小点数

max_range = 15., // 点云的最大距离,距离(l2范数)大于此值的点云数据直接丢弃

- loop_closure_adaptive_voxel_filter(闭环自适应体素滤波器)

- 用于为闭环检测计算稀疏点云(sparser point cloud)

7. 图的颜色太暗

- constexpr float kMinProbability = 0.2f; probability_values.h