VS2013+PCL1.8.0+Opencv2.4.9+Kinect V2 点云的获取与显示

一、PCL 1.8.0 相关文件下载:

先到百度网盘下载安装和配置文件,开始成功配置了X64的,后来为了和自己32位的opencv匹配,改为了win32版本的;

属性表和相应的pdb.rar都下载下来。 https://pan.baidu.com/s/1Z2IGOAmizWfDa9Wt1mE9Gg

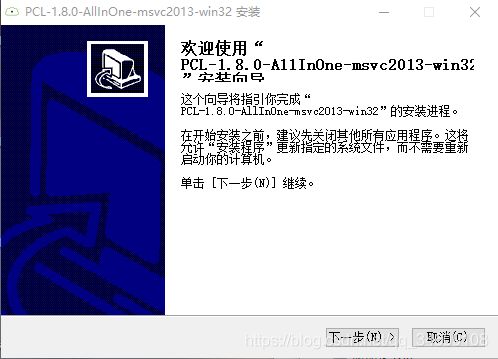

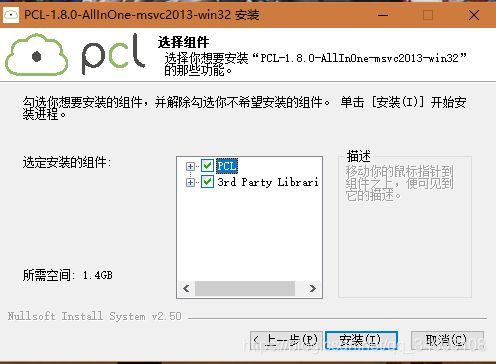

二、点击 PCL-1.8.0-AllInOne-msvc2013-win32.exe 运行

点击下一步、我接受、

add pcl to the system PATH for all users

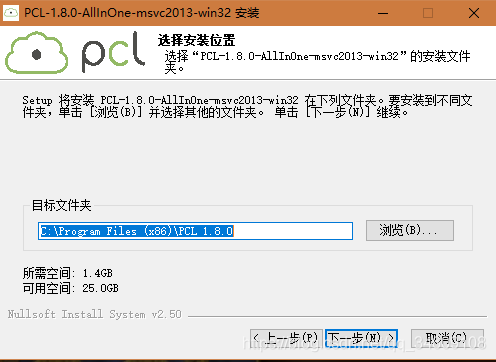

选择安装位置,默认安装位置即可

点击安装完成,需要等几分钟,安装过程中会弹出安装openni的界面,这时一定要把安装路径改为

C:\Program Files (x86)\PCL 1.8.0\3rdParty\OpenNI2

三: 配置

step1 : 拷贝与你安装PCL版本对应的PDB压缩包解压后的PDB文件,到你PCL安装路径下的bin文件夹

也就是将,PCL-1.8.0-AllInOne-msvc2013-win32-pdb.rar 解压到 C:\Program Files (x86)\PCL 1.8.0\bin 目录下

step2 :更改环境变量,电脑右击属性-》高级系统设置-》环境变量-》path-》

添加如下环境变量:配置好环境变量后需要重启一下电脑

C:\Program Files (x86)\PCL 1.8.0\bin (这条如果有,就不需要再添加)

C:\Program Files (x86)\PCL 1.8.0\3rdParty\FLANN\bin

C:\Program Files (x86)\PCL 1.8.0\3rdParty\VTK\bin

C:\Program Files (x86)\PCL 1.8.0\3rdParty\Qhull\bin

C:\Program Files (x86)\PCL 1.8.0\3rdParty\OpenNI2\Tools

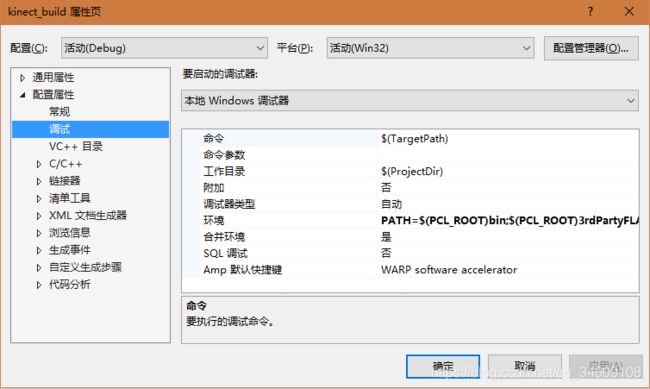

step3 :新建一个visual studio 空项目。右击xxx项目的属性

在调试下的,环境添加 : PATH=$(PCL_ROOT)bin;$(PCL_ROOT)3rdPartyFLANNbin;$(PCL_ROOT)3rdPartyVTKbin;$(PCL_ROOT)Qhullbin;$(PCL_ROOT)3rdPartyOpenNI2Tools;$(PATH)

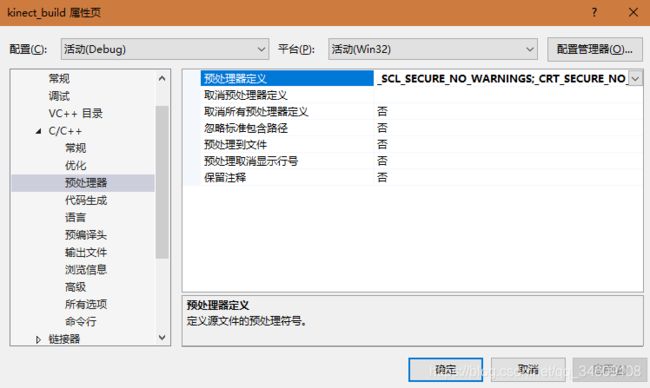

点击 c/c++ 下 预处理器添加 : _SCL_SECURE_NO_WARNINGS 和 _CRT_SECURE_NO_WARNINGS

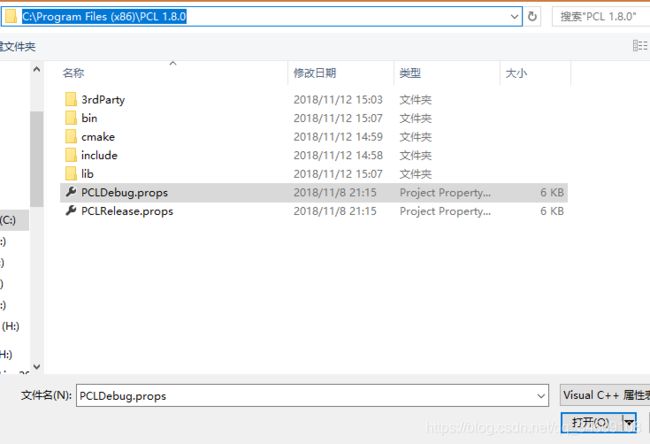

step4 : 将下载属性表里的pclDebug.props和pclRelease.props 放在如下位置

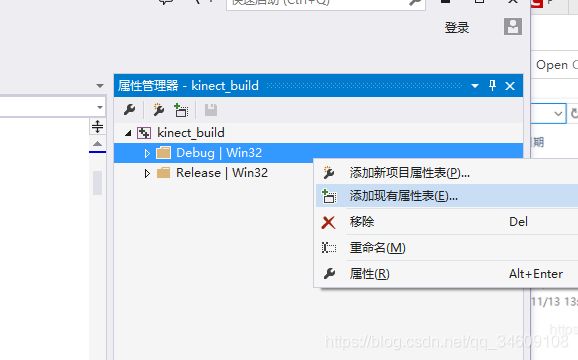

点击vs项目的属性管理器 :

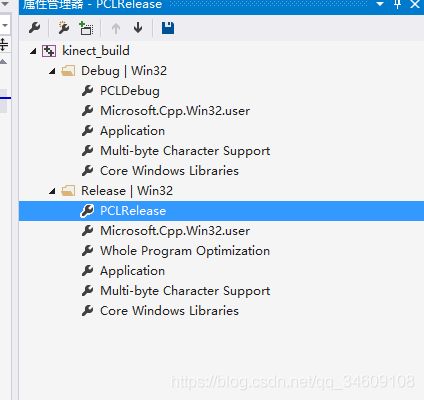

debug|win32右击,添加现有属性表:

添加进去,同理添加pclRelease.props 到release|win32 下 :配置好如图 :

三 : 如上,pcl 32 位就配置好了, 如需64位差不多的操作,接下来验证一下,配置结果,解决资源方案管理器下新建一个main.cpp 复制如下代码 :

#include

#include

#include

#include

int user_data;

void viewerOneOff(pcl::visualization::PCLVisualizer& viewer)

{

viewer.setBackgroundColor(1.0, 0.5, 1.0);

pcl::PointXYZ o;

o.x = 1.0;

o.y = 0;

o.z = 0;

viewer.addSphere(o, 0.25, "sphere", 0);

std::cout << "i only run once" << std::endl;

}

void viewerPsycho(pcl::visualization::PCLVisualizer& viewer)

{

static unsigned count = 0;

std::stringstream ss;

ss << "Once per viewer loop: " << count++;

viewer.removeShape("text", 0);

viewer.addText(ss.str(), 200, 300, "text", 0);

//FIXME: possible race condition here:

user_data++;

}

int main()

{

pcl::PointCloud::Ptr cloud(new pcl::PointCloud);

pcl::io::loadPCDFile("example.pcd的位置", *cloud);

pcl::visualization::CloudViewer viewer("Cloud Viewer");

//blocks until the cloud is actually rendered

viewer.showCloud(cloud);

//use the following functions to get access to the underlying more advanced/powerful

//PCLVisualizer

//This will only get called once

viewer.runOnVisualizationThreadOnce(viewerOneOff);

//This will get called once per visualization iteration

viewer.runOnVisualizationThread(viewerPsycho);

while (!viewer.wasStopped())

{

//you can also do cool processing here

//FIXME: Note that this is running in a separate thread from viewerPsycho

//and you should guard against race conditions yourself...

user_data++;

}

return 0;

}

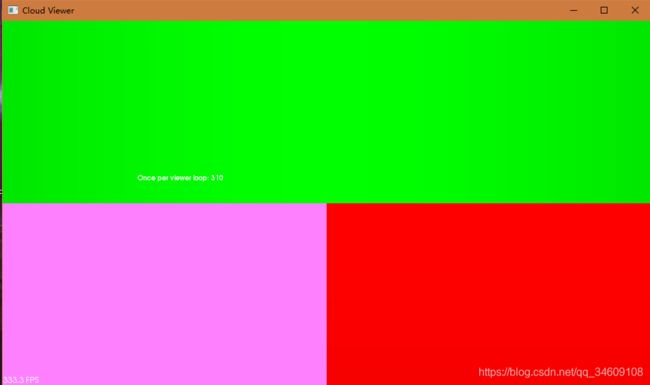

点击运行,得出如下效果;

使用鼠标滚轮进行放大缩小可得 :

四 : opencv 2.4.9 安装配置,这个我写过博客,可去查看,也需要修改环境变量. 这里博主安装在了D盘 ;

环境变量:

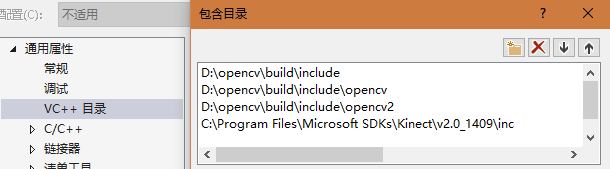

下载安装 kinect v2 的sdk , 然后配置属性,vc++ 目录配置,包含目录下:

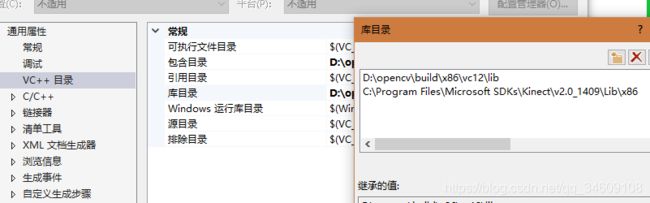

库目录下添加opencv 和 kinect 的库

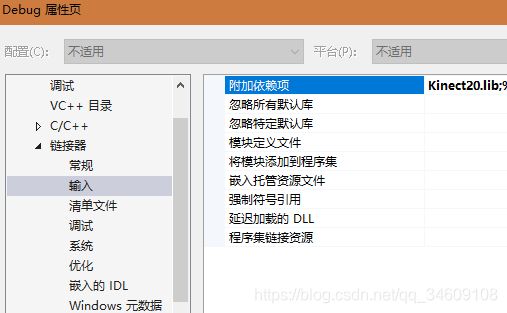

链接器输入下添加 :

Kinect20.lib

opencv_ml249d.lib

opencv_calib3d249d.lib

opencv_contrib249d.lib

opencv_core249d.lib

opencv_features2d249d.lib

opencv_flann249d.lib

opencv_gpu249d.lib

opencv_highgui249d.lib

opencv_imgproc249d.lib

opencv_legacy249d.lib

opencv_objdetect249d.lib

opencv_ts249d.lib

opencv_video249d.lib

opencv_nonfree249d.lib

opencv_ocl249d.lib

opencv_photo249d.lib

opencv_stitching249d.lib

opencv_superres249d.lib

opencv_videostab249d.lib

opencv_objdetect249.lib

opencv_ts249.lib

opencv_video249.lib

opencv_nonfree249.lib

opencv_ocl249.lib

opencv_photo249.lib

opencv_stitching249.lib

opencv_superres249.lib

opencv_videostab249.lib

opencv_calib3d249.lib

opencv_contrib249.lib

opencv_core249.lib

opencv_features2d249.lib

opencv_flann249.lib

opencv_gpu249.lib

opencv_highgui249.lib

opencv_imgproc249.lib

opencv_legacy249.lib

opencv_ml249.lib

五 、 所以环境已经配好 ,现在开始Kinect V2 点云的获取与保存:

运行结果如图:

main.cpp

// Disable Error C4996 that occur when using Boost.Signals2.

#ifdef _DEBUG

#define _SCL_SECURE_NO_WARNINGS

#endif

#include "kinect_grabber.h"

#include

#include //PCL的PCD格式文件的输入输出头文件

#include //PCL对各种格式的点的支持头文件

#include

typedef pcl::PointXYZRGBA PointType;

int main(int argc, char* argv[])

{

int nnn = 0;

// PCL Visualizer

boost::shared_ptr viewer(

new pcl::visualization::PCLVisualizer("Point Cloud Viewer"));

viewer->setCameraPosition(0.0, 0.0, -2.5, 0.0, 0.0, 0.0);

// Point Cloud

pcl::PointCloud::ConstPtr cloud;

// Retrieved Point Cloud Callback Function

boost::mutex mutex;

boost::function::ConstPtr&)> function =

[&cloud, &mutex](const pcl::PointCloud::ConstPtr& ptr){

boost::mutex::scoped_lock lock(mutex);

/* Point Cloud Processing */

cloud = ptr->makeShared();

};

// Kinect2Grabber

boost::shared_ptr grabber = boost::make_shared();

// Register Callback Function

boost::signals2::connection connection = grabber->registerCallback(function);

// Start Grabber

grabber->start();

bool switchfd = true;

while (!viewer->wasStopped()){

// Update Viewer

viewer->spinOnce();

boost::mutex::scoped_try_lock lock(mutex);

if (lock.owns_lock() && cloud){

// Update Point Cloud

//cout << cloud->width << endl;

//cout << cloud->size() << endl;

//保存点云数据

if (nnn == 100)

{

cin >> nnn;

pcl::io::savePCDFileASCII("test_pcd.pcd", *cloud);

nnn = 0;

}

nnn++;

cout << "nnn: " << nnn << endl;

if (!viewer->updatePointCloud(cloud, "cloud")){

viewer->addPointCloud(cloud, "cloud");

}

}

}

// Stop Grabber

grabber->stop();

// Disconnect Callback Function

if (connection.connected()){

connection.disconnect();

}

return 0;

}

kinect_grabber.h

// Kinect2Grabber is pcl::Grabber to retrieve the point cloud data from Kinect v2 using Kinect for Windows SDK 2.x.

// This source code is licensed under the MIT license. Please see the License in License.txt.

#ifndef KINECT2_GRABBER

#define KINECT2_GRABBER

#define NOMINMAX

#include

#include

#include

#include

#include

#include

namespace pcl

{

struct pcl::PointXYZ;

struct pcl::PointXYZI;

struct pcl::PointXYZRGB;

struct pcl::PointXYZRGBA;

template class pcl::PointCloud;

template

inline void SafeRelease(Interface *& IRelease)

{

if (IRelease != NULL){

IRelease->Release();

IRelease = NULL;

}

}

class Kinect2Grabber : public pcl::Grabber

{

public:

Kinect2Grabber();

virtual ~Kinect2Grabber() throw ();

virtual void start();

virtual void stop();

virtual bool isRunning() const;

virtual std::string getName() const;

virtual float getFramesPerSecond() const;

typedef void (signal_Kinect2_PointXYZ)(const boost::shared_ptr>&);

typedef void (signal_Kinect2_PointXYZI)(const boost::shared_ptr>&);

typedef void (signal_Kinect2_PointXYZRGB)(const boost::shared_ptr>&);

typedef void (signal_Kinect2_PointXYZRGBA)(const boost::shared_ptr>&);

protected:

boost::signals2::signal* signal_PointXYZ;

boost::signals2::signal* signal_PointXYZI;

boost::signals2::signal* signal_PointXYZRGB;

boost::signals2::signal* signal_PointXYZRGBA;

pcl::PointCloud::Ptr convertDepthToPointXYZ(UINT16* depthBuffer);

pcl::PointCloud::Ptr convertInfraredDepthToPointXYZI(UINT16* infraredBuffer, UINT16* depthBuffer);

pcl::PointCloud::Ptr convertRGBDepthToPointXYZRGB(RGBQUAD* colorBuffer, UINT16* depthBuffer);

pcl::PointCloud::Ptr convertRGBADepthToPointXYZRGBA(RGBQUAD* colorBuffer, UINT16* depthBuffer);

boost::thread thread;

mutable boost::mutex mutex;

void threadFunction();

bool quit;

bool running;

HRESULT result;

IKinectSensor* sensor;

ICoordinateMapper* mapper;

IColorFrameSource* colorSource;

IColorFrameReader* colorReader;

IDepthFrameSource* depthSource;

IDepthFrameReader* depthReader;

IInfraredFrameSource* infraredSource;

IInfraredFrameReader* infraredReader;

int colorWidth;

int colorHeight;

std::vector colorBuffer;

int depthWidth;

int depthHeight;

std::vector depthBuffer;

int infraredWidth;

int infraredHeight;

std::vector infraredBuffer;

};

pcl::Kinect2Grabber::Kinect2Grabber()

: sensor(nullptr)

, mapper(nullptr)

, colorSource(nullptr)

, colorReader(nullptr)

, depthSource(nullptr)

, depthReader(nullptr)

, infraredSource(nullptr)

, infraredReader(nullptr)

, result(S_OK)

, colorWidth(1920)

, colorHeight(1080)

, colorBuffer()

, depthWidth(512)

, depthHeight(424)

, depthBuffer()

, infraredWidth(512)

, infraredHeight(424)

, infraredBuffer()

, running(false)

, quit(false)

, signal_PointXYZ(nullptr)

, signal_PointXYZI(nullptr)

, signal_PointXYZRGB(nullptr)

, signal_PointXYZRGBA(nullptr)

{

// Create Sensor Instance

result = GetDefaultKinectSensor(&sensor);

if (FAILED(result)){

throw std::exception("Exception : GetDefaultKinectSensor()");

}

// Open Sensor

result = sensor->Open();

if (FAILED(result)){

throw std::exception("Exception : IKinectSensor::Open()");

}

// Retrieved Coordinate Mapper

result = sensor->get_CoordinateMapper(&mapper);

if (FAILED(result)){

throw std::exception("Exception : IKinectSensor::get_CoordinateMapper()");

}

// Retrieved Color Frame Source

result = sensor->get_ColorFrameSource(&colorSource);

if (FAILED(result)){

throw std::exception("Exception : IKinectSensor::get_ColorFrameSource()");

}

// Retrieved Depth Frame Source

result = sensor->get_DepthFrameSource(&depthSource);

if (FAILED(result)){

throw std::exception("Exception : IKinectSensor::get_DepthFrameSource()");

}

// Retrieved Infrared Frame Source

result = sensor->get_InfraredFrameSource(&infraredSource);

if (FAILED(result)){

throw std::exception("Exception : IKinectSensor::get_InfraredFrameSource()");

}

// Retrieved Color Frame Size

IFrameDescription* colorDescription;

result = colorSource->get_FrameDescription(&colorDescription);

if (FAILED(result)){

throw std::exception("Exception : IColorFrameSource::get_FrameDescription()");

}

result = colorDescription->get_Width(&colorWidth); // 1920

if (FAILED(result)){

throw std::exception("Exception : IFrameDescription::get_Width()");

}

result = colorDescription->get_Height(&colorHeight); // 1080

if (FAILED(result)){

throw std::exception("Exception : IFrameDescription::get_Height()");

}

SafeRelease(colorDescription);

// To Reserve Color Frame Buffer

colorBuffer.resize(colorWidth * colorHeight);

// Retrieved Depth Frame Size

IFrameDescription* depthDescription;

result = depthSource->get_FrameDescription(&depthDescription);

if (FAILED(result)){

throw std::exception("Exception : IDepthFrameSource::get_FrameDescription()");

}

result = depthDescription->get_Width(&depthWidth); // 512

if (FAILED(result)){

throw std::exception("Exception : IFrameDescription::get_Width()");

}

result = depthDescription->get_Height(&depthHeight); // 424

if (FAILED(result)){

throw std::exception("Exception : IFrameDescription::get_Height()");

}

SafeRelease(depthDescription);

// To Reserve Depth Frame Buffer

depthBuffer.resize(depthWidth * depthHeight);

// Retrieved Infrared Frame Size

IFrameDescription* infraredDescription;

result = infraredSource->get_FrameDescription(&infraredDescription);

if (FAILED(result)){

throw std::exception("Exception : IInfraredFrameSource::get_FrameDescription()");

}

result = infraredDescription->get_Width(&infraredWidth); // 512

if (FAILED(result)){

throw std::exception("Exception : IFrameDescription::get_Width()");

}

result = infraredDescription->get_Height(&infraredHeight); // 424

if (FAILED(result)){

throw std::exception("Exception : IFrameDescription::get_Height()");

}

SafeRelease(infraredDescription);

// To Reserve Infrared Frame Buffer

infraredBuffer.resize(infraredWidth * infraredHeight);

signal_PointXYZ = createSignal();

signal_PointXYZI = createSignal();

signal_PointXYZRGB = createSignal();

signal_PointXYZRGBA = createSignal();

}

pcl::Kinect2Grabber::~Kinect2Grabber() throw()

{

stop();

disconnect_all_slots();

disconnect_all_slots();

disconnect_all_slots();

disconnect_all_slots();

thread.join();

// End Processing

if (sensor){

sensor->Close();

}

SafeRelease(sensor);

SafeRelease(mapper);

SafeRelease(colorSource);

SafeRelease(colorReader);

SafeRelease(depthSource);

SafeRelease(depthReader);

SafeRelease(infraredSource);

SafeRelease(infraredReader);

}

void pcl::Kinect2Grabber::start()

{

// Open Color Frame Reader

result = colorSource->OpenReader(&colorReader);

if (FAILED(result)){

throw std::exception("Exception : IColorFrameSource::OpenReader()");

}

// Open Depth Frame Reader

result = depthSource->OpenReader(&depthReader);

if (FAILED(result)){

throw std::exception("Exception : IDepthFrameSource::OpenReader()");

}

// Open Infrared Frame Reader

result = infraredSource->OpenReader(&infraredReader);

if (FAILED(result)){

throw std::exception("Exception : IInfraredFrameSource::OpenReader()");

}

running = true;

thread = boost::thread(&Kinect2Grabber::threadFunction, this);

}

void pcl::Kinect2Grabber::stop()

{

boost::unique_lock lock(mutex);

quit = true;

running = false;

lock.unlock();

}

bool pcl::Kinect2Grabber::isRunning() const

{

boost::unique_lock lock(mutex);

return running;

lock.unlock();

}

std::string pcl::Kinect2Grabber::getName() const

{

return std::string("Kinect2Grabber");

}

float pcl::Kinect2Grabber::getFramesPerSecond() const

{

return 30.0f;

}

void pcl::Kinect2Grabber::threadFunction()

{

while (!quit){

boost::unique_lock lock(mutex);

// Acquire Latest Color Frame

IColorFrame* colorFrame = nullptr;

result = colorReader->AcquireLatestFrame(&colorFrame);

if (SUCCEEDED(result)){

// Retrieved Color Data

result = colorFrame->CopyConvertedFrameDataToArray(colorBuffer.size() * sizeof(RGBQUAD), reinterpret_cast(&colorBuffer[0]), ColorImageFormat::ColorImageFormat_Bgra);

if (FAILED(result)){

throw std::exception("Exception : IColorFrame::CopyConvertedFrameDataToArray()");

}

}

SafeRelease(colorFrame);

// Acquire Latest Depth Frame

IDepthFrame* depthFrame = nullptr;

result = depthReader->AcquireLatestFrame(&depthFrame);

if (SUCCEEDED(result)){

// Retrieved Depth Data

result = depthFrame->CopyFrameDataToArray(depthBuffer.size(), &depthBuffer[0]);

if (FAILED(result)){

throw std::exception("Exception : IDepthFrame::CopyFrameDataToArray()");

}

}

SafeRelease(depthFrame);

// Acquire Latest Infrared Frame

IInfraredFrame* infraredFrame = nullptr;

result = infraredReader->AcquireLatestFrame(&infraredFrame);

if (SUCCEEDED(result)){

// Retrieved Infrared Data

result = infraredFrame->CopyFrameDataToArray(infraredBuffer.size(), &infraredBuffer[0]);

if (FAILED(result)){

throw std::exception("Exception : IInfraredFrame::CopyFrameDataToArray()");

}

}

SafeRelease(infraredFrame);

lock.unlock();

if (signal_PointXYZ->num_slots() > 0){

signal_PointXYZ->operator()(convertDepthToPointXYZ(&depthBuffer[0]));

}

if (signal_PointXYZI->num_slots() > 0){

signal_PointXYZI->operator()(convertInfraredDepthToPointXYZI(&infraredBuffer[0], &depthBuffer[0]));

}

if (signal_PointXYZRGB->num_slots() > 0){

signal_PointXYZRGB->operator()(convertRGBDepthToPointXYZRGB(&colorBuffer[0], &depthBuffer[0]));

}

if (signal_PointXYZRGBA->num_slots() > 0){

signal_PointXYZRGBA->operator()(convertRGBADepthToPointXYZRGBA(&colorBuffer[0], &depthBuffer[0]));

}

}

}

pcl::PointCloud::Ptr pcl::Kinect2Grabber::convertDepthToPointXYZ(UINT16* depthBuffer)

{

pcl::PointCloud::Ptr cloud(new pcl::PointCloud());

cloud->width = static_cast(depthWidth);

cloud->height = static_cast(depthHeight);

cloud->is_dense = false;

cloud->points.resize(cloud->height * cloud->width);

pcl::PointXYZ* pt = &cloud->points[0];

for (int y = 0; y < depthHeight; y++){

for (int x = 0; x < depthWidth; x++, pt++){

pcl::PointXYZ point;

DepthSpacePoint depthSpacePoint = { static_cast(x), static_cast(y) };

UINT16 depth = depthBuffer[y * depthWidth + x];

// Coordinate Mapping Depth to Camera Space, and Setting PointCloud XYZ

CameraSpacePoint cameraSpacePoint = { 0.0f, 0.0f, 0.0f };

mapper->MapDepthPointToCameraSpace(depthSpacePoint, depth, &cameraSpacePoint);

point.x = cameraSpacePoint.X;

point.y = cameraSpacePoint.Y;

point.z = cameraSpacePoint.Z;

*pt = point;

}

}

return cloud;

}

pcl::PointCloud::Ptr pcl::Kinect2Grabber::convertInfraredDepthToPointXYZI(UINT16* infraredBuffer, UINT16* depthBuffer)

{

pcl::PointCloud::Ptr cloud(new pcl::PointCloud());

cloud->width = static_cast(depthWidth);

cloud->height = static_cast(depthHeight);

cloud->is_dense = false;

cloud->points.resize(cloud->height * cloud->width);

pcl::PointXYZI* pt = &cloud->points[0];

for (int y = 0; y < depthHeight; y++){

for (int x = 0; x < depthWidth; x++, pt++){

pcl::PointXYZI point;

DepthSpacePoint depthSpacePoint = { static_cast(x), static_cast(y) };

UINT16 depth = depthBuffer[y * depthWidth + x];

// Setting PointCloud Intensity

point.intensity = static_cast(infraredBuffer[y * depthWidth + x]);

// Coordinate Mapping Depth to Camera Space, and Setting PointCloud XYZ

CameraSpacePoint cameraSpacePoint = { 0.0f, 0.0f, 0.0f };

mapper->MapDepthPointToCameraSpace(depthSpacePoint, depth, &cameraSpacePoint);

point.x = cameraSpacePoint.X;

point.y = cameraSpacePoint.Y;

point.z = cameraSpacePoint.Z;

*pt = point;

}

}

return cloud;

}

pcl::PointCloud::Ptr pcl::Kinect2Grabber::convertRGBDepthToPointXYZRGB(RGBQUAD* colorBuffer, UINT16* depthBuffer)

{

pcl::PointCloud::Ptr cloud(new pcl::PointCloud());

cloud->width = static_cast(depthWidth);

cloud->height = static_cast(depthHeight);

cloud->is_dense = false;

cloud->points.resize(cloud->height * cloud->width);

pcl::PointXYZRGB* pt = &cloud->points[0];

for (int y = 0; y < depthHeight; y++){

for (int x = 0; x < depthWidth; x++, pt++){

pcl::PointXYZRGB point;

DepthSpacePoint depthSpacePoint = { static_cast(x), static_cast(y) };

UINT16 depth = depthBuffer[y * depthWidth + x];

// Coordinate Mapping Depth to Color Space, and Setting PointCloud RGB

ColorSpacePoint colorSpacePoint = { 0.0f, 0.0f };

mapper->MapDepthPointToColorSpace(depthSpacePoint, depth, &colorSpacePoint);

int colorX = static_cast(std::floor(colorSpacePoint.X + 0.5f));

int colorY = static_cast(std::floor(colorSpacePoint.Y + 0.5f));

if ((0 <= colorX) && (colorX < colorWidth) && (0 <= colorY) && (colorY < colorHeight)){

RGBQUAD color = colorBuffer[colorY * colorWidth + colorX];

point.b = color.rgbBlue;

point.g = color.rgbGreen;

point.r = color.rgbRed;

}

// Coordinate Mapping Depth to Camera Space, and Setting PointCloud XYZ

CameraSpacePoint cameraSpacePoint = { 0.0f, 0.0f, 0.0f };

mapper->MapDepthPointToCameraSpace(depthSpacePoint, depth, &cameraSpacePoint);

if ((0 <= colorX) && (colorX < colorWidth) && (0 <= colorY) && (colorY < colorHeight)){

point.x = cameraSpacePoint.X;

point.y = cameraSpacePoint.Y;

point.z = cameraSpacePoint.Z;

}

*pt = point;

}

}

return cloud;

}

pcl::PointCloud::Ptr pcl::Kinect2Grabber::convertRGBADepthToPointXYZRGBA(RGBQUAD* colorBuffer, UINT16* depthBuffer)

{

pcl::PointCloud::Ptr cloud(new pcl::PointCloud());

cloud->width = static_cast(depthWidth);

cloud->height = static_cast(depthHeight);

cloud->is_dense = false;

cloud->points.resize(cloud->height * cloud->width);

pcl::PointXYZRGBA* pt = &cloud->points[0];

for (int y = 0; y < depthHeight; y++){

for (int x = 0; x < depthWidth; x++, pt++){

pcl::PointXYZRGBA point;

DepthSpacePoint depthSpacePoint = { static_cast(x), static_cast(y) };

UINT16 depth = depthBuffer[y * depthWidth + x];

// Coordinate Mapping Depth to Color Space, and Setting PointCloud RGBA

ColorSpacePoint colorSpacePoint = { 0.0f, 0.0f };

mapper->MapDepthPointToColorSpace(depthSpacePoint, depth, &colorSpacePoint);

int colorX = static_cast(std::floor(colorSpacePoint.X + 0.5f));

int colorY = static_cast(std::floor(colorSpacePoint.Y + 0.5f));

if ((0 <= colorX) && (colorX < colorWidth) && (0 <= colorY) && (colorY < colorHeight)){

RGBQUAD color = colorBuffer[colorY * colorWidth + colorX];

point.b = color.rgbBlue;

point.g = color.rgbGreen;

point.r = color.rgbRed;

point.a = color.rgbReserved;

}

// Coordinate Mapping Depth to Camera Space, and Setting PointCloud XYZ

CameraSpacePoint cameraSpacePoint = { 0.0f, 0.0f, 0.0f };

mapper->MapDepthPointToCameraSpace(depthSpacePoint, depth, &cameraSpacePoint);

if ((0 <= colorX) && (colorX < colorWidth) && (0 <= colorY) && (colorY < colorHeight)){

point.x = cameraSpacePoint.X;

point.y = cameraSpacePoint.Y;

point.z = cameraSpacePoint.Z;

}

*pt = point;

}

}

return cloud;

}

}

#endif KINECT2_GRABBER